The rise of Artificial General Intelligence (AGI)

Should we worry about what's to come in AI?

The recent events at OpenAI have been quite exciting. However, putting aside matters beyond our control or comprehension, there are many lessons to be learned. With Sam Altman back as CEO after a flood of heart emojis from his supporters, it's worth taking a moment to reflect on the situation.

Crowd goes with the leaders

I don't have a definite opinion about Sam, nor do I know him personally. The same is probably true for 99% of those who spoke up to support him as a victim of "the bad board". In fact, most of us likely know too little to make such judgments. I've come across supporters of the board and its integrity, as well as people who allege that Sam manipulated or lied to them (which also echoes the board's statement). But none of this is what matters here.

People immediately sided with him because they like him, and I like him too. I found him credible because of his work at Y Combinator and then at OpenAI. I've heard him speak at many events, saying nice, comforting things. Now this nice guy was wrongly accused by the wrong board, which was probably seeking power… and for most of us, that was enough to form an opinion.

Similarly, I like Ilya Sutskever, though for different reasons. I'm fascinated by his contributions to deep learning, his papers, and his insights on AI, and I believe he is credible too. Yet he is much less of a public figure and isn't adored by the general public (maybe just by nerds like me). And it seems Ilya had some doubts recently:

The fact that he lost his trust in Sam is confusing, especially since many people in the tech community highly value his judgment and integrity.

What comforts me is that he has since said he regrets his part in this, and more than 700 of OpenAI's roughly 770 employees, who likely know Sam better than I do, also took Sam's side.

OpenAI has been designed for intervention

Nonetheless, the board did lose trust in Sam for some reason. In fact, the rest of the board apart from Greg Brockman, Ilya included, voted to remove him. This raises concerns, especially given how blunt their statement was. There must have been a substantial reason for their decision, though it could also have been politics or a power struggle. OpenAI is currently one of the most significant companies in the world, which alone is a strong incentive for power games, and this is why many people defended Sam as soon as they heard the news. As a founder myself, I can only imagine how upset I would be if I created a company, took venture capital, let the investors lead the board, and was then fired by them.

This reignites the familiar debate about founders and startup boards, but that debate isn't entirely relevant here. Why? Because OpenAI is far from a typical startup. Its structure is complex, with a non-profit controlling a capped-profit company and multiple other parties involved. This is part of OpenAI's Charter:

Our primary fiduciary duty is to humanity. We anticipate needing to marshal substantial resources to fulfill our mission, but will always diligently act to minimize conflicts of interest among our employees and stakeholders that could compromise broad benefit.

Their structure is built on this foundation. It was deliberately designed to uphold this promise, including no shares for the board and founders and no financial incentives, so that the board would make honest decisions based on its best judgment and in everyone's interest.

Focusing on what matters: money (and AGI)

Some people compare firing Sam to Apple's ousting of Steve Jobs in 1985, but I doubt the comparison holds. Apple wasn't in great shape back then, whereas OpenAI is thriving under Sam. You don't fire a great leader at such a time without a good reason, one we might never find out.

Moreover, it seems some of OpenAI's principles aren't being fully adhered to. OpenAI isn't as open as its name suggests: it often doesn't disclose how it trains its models or what technical approaches it uses. By comparison, Meta is more transparent with its LLaMA models. Also, this non-profit quickly reached a reported revenue pace of over 1 billion dollars a year.

This could raise suspicions, but we must recognize the core ingredient of OpenAI's progress: resources, which means money. The computing power required to train the models is enormous, and the top AI engineers they need command high salaries. All of this requires substantial financing.

Sam is undoubtedly skilled at this. He excels at fundraising and understands what motivates investors. His recent fundraising efforts, especially after the success of DevDay, have focused on securing resources for future projects.

In fact, ChatGPT might not be OpenAI's main pursuit. Launching it may have been a way to secure more funds, riding the hype and buzz around it. If they could, they would perhaps prefer to stay under the radar while developing their real goal: AGI.

Recently, OpenAI's career page was updated with their #1 core value:

AGI focus

We are committed to building safe, beneficial AGI that will have a massive positive impact on humanity's future. Anything that doesn’t help with that is out of scope.

This is where we can find some additional clues about the board's decision. If we look at what happened shortly before the drama, we find Sam saying, for example:

"What we launched today is going to look very quaint relative to what we're busy creating for you now."

"Is this a tool we've built or a creature we have built?"

You can watch the DevDay opening for the full context.

This might be one of the factors that led the board to doubt whether Sam was the right CEO. The question is whether this was a matter of pride, with the board not being informed about these discoveries, or, more plausibly to me, whether the discoveries themselves made the board lose faith in Sam's approach. But what could this be related to?

Some people speculate, and assuming the board acted in good faith this seems a probable scenario, that the board had an issue with Sam's approach to AGI alignment. This brings us to another significant point. Ladies and gentlemen:

The AGI

As stated, OpenAI's primary and most crucial mission is the creation of AGI. Put simply, an AGI could learn to perform any intellectual task that humans or animals can. Alternatively, OpenAI's own Charter describes AGI as highly autonomous systems that outperform humans at most economically valuable work.

Both definitions lead to the same conclusion: AGI is a badass.

Among the scientists and intelligent individuals I know, there are serious concerns about whether AGI is safe for humanity. Here’s what Professor Stephen Hawking and his colleagues once wrote:

The potential benefits are huge; everything that civilisation has to offer is a product of human intelligence; we cannot predict what we might achieve when this intelligence is magnified by the tools that AI may provide, but the eradication of war, disease, and poverty would be high on anyone's list. Success in creating AI would be the biggest event in human history.

Unfortunately, it might also be the last, unless we learn how to avoid the risks. In the near term, world militaries are considering autonomous-weapon systems that can choose and eliminate targets; the UN and Human Rights Watch have advocated a treaty banning such weapons.

Access to military capabilities is not the only risk from AGI; there are many other potential threats, such as the development of deadly viruses, global chaos, cyberattacks, and more. In January 2015, Stephen Hawking, Elon Musk, and dozens of artificial intelligence experts signed an open letter on artificial intelligence. The letter highlights short-term concerns:

For example, a self-driving car may, in an emergency, have to decide between a small risk of a major accident and a large probability of a small accident. Other concerns relate to lethal intelligent autonomous weapons: Should they be banned? If so, how should 'autonomy' be precisely defined? If not, how should culpability for any misuse or malfunction be apportioned?

As well as the long term:

we could one day lose control of AI systems via the rise of superintelligences that do not act in accordance with human wishes – and that such powerful systems would threaten humanity. Are such dystopic outcomes possible? If so, how might these situations arise?

There are also opposing views. Mark Zuckerberg believes AI will "unlock a huge amount of positive things," like curing diseases and making autonomous cars safer. Wired editor Kevin Kelly argues that natural intelligence is more complex than AGI proponents think, and that intelligence alone isn't sufficient for significant scientific and societal advances. Roboticist Rodney Brooks put it more bluntly: "I think it is a mistake to be worrying about us developing malevolent AI anytime in the next few hundred years."

So, should we be worried at all?

I believe we should consider it, at least as Professor Hawking suggests:

If a superior alien civilisation sent us a message saying, 'We'll arrive in a few decades,' would we just reply, 'OK, call us when you get here—we'll leave the lights on?' Probably not—but this is more or less what is happening with AI.

Also, I don't think AGI is a matter of centuries or even decades. It could be a matter of months or days, or it might already exist without our knowledge. Consider what Sam said just recently:

“Four times now in the history of OpenAI, the most recent time was just in the last couple weeks, I've gotten to be in the room, when we sort of push the veil of ignorance back and the frontier of discovery forward, and getting to do that is the professional honor of a lifetime”

Some speculate that he was referring to Q*, which might indeed be what paves the way to AGI. As reported by Reuters:

Some at OpenAI believe Q* (pronounced Q-Star) could be a breakthrough in the startup's search for what's known as artificial general intelligence (AGI), one of the people told Reuters. Given vast computing resources, the new model was able to solve certain mathematical problems. Though only performing math on the level of grade-school students, acing such tests made researchers very optimistic about Q*’s future success.

To be honest, based on what I know and my experience with OpenAI's APIs, I don't believe they have developed AGI internally just yet; he might have been referring to GPT-5. New iterations of GPT are, in fact, not that mysterious: the model itself is essentially a set of parameters plus the code that runs it, not much else. The difference between successive GPT versions is probably mostly different training and larger datasets, but this is also just an assumption, as OpenAI doesn't reveal much about its methods.

Some might argue that Large Language Models (including GPT-4) merely predict the next most probable word and therefore cannot lay the groundwork for AGI. This objection mostly stems from AGI being perceived as a kind of ethereal, god-like entity. In reality, depending on the definition of AGI we use, it might be more feasible.
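To make "predicting the next most probable word" concrete, here is a deliberately tiny sketch. It is not how GPT works internally: real LLMs use a neural network over tokens (word pieces) and choose from a probability distribution over a large vocabulary, whereas this toy simply counts which word follows which in a made-up sentence and always picks the most frequent continuation. The function names are illustrative only.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    follows: dict = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict, start: str, length: int) -> list:
    """Greedily append the most frequent next word, up to `length` times."""
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # no known continuation: stop early
        # most_common breaks ties in the order words were first seen
        out.append(candidates.most_common(1)[0][0])
    return out


corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", 4)))
```

Even this crude counter produces fluent-looking fragments; the open question is how far the same basic objective scales with vastly larger models and data.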

Continuing with the Reuters article:

Researchers consider math to be a frontier of generative AI development. Currently, generative AI is good at writing and language translation by statistically predicting the next word, and answers to the same question can vary widely. But conquering the ability to do math — where there is only one right answer — implies AI would have greater reasoning capabilities resembling human intelligence. Unlike a calculator that can solve a limited number of operations, AGI can generalize, learn and comprehend. In their letter to the board, researchers flagged AI’s prowess and potential danger

We already know that targeted training can make a model's mathematical results more reliable. We've also discovered that Large Language Models (LLMs) are quite adept at coding. Extending their capabilities, for example with a code interpreter, can make them competent software developers, partly because it enables iteration: the AI can run tests on the code it has written, then debug and fix it, repeating until the results hold up.
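The write, test, debug, fix loop described above can be sketched in a few lines. This is a minimal illustration under loud assumptions: `fake_model` is a hypothetical stand-in for an LLM that returns a new attempt each time, and the "test suite" is a single hard-coded check. A real system would call an actual model, pass it the error text, and run untrusted code in a sandbox rather than with `exec`.

```python
from typing import Callable, Optional


# Hypothetical stand-in for an LLM. A real model would read `feedback` (the error
# from the previous attempt); this fake one just returns scripted drafts in order.
def fake_model(feedback: Optional[str], attempt: int) -> str:
    drafts = [
        "def add(a, b):\n    return a - b\n",  # buggy first draft
        "def add(a, b):\n    return a + b\n",  # corrected draft
    ]
    return drafts[min(attempt, len(drafts) - 1)]


def run_tests(source: str) -> Optional[str]:
    """Execute candidate code and return an error message, or None if the test passes."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # WARNING: never exec untrusted code outside a sandbox
        if namespace["add"](2, 3) != 5:
            raise AssertionError("add(2, 3) should be 5")
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


def write_test_fix(model: Callable[[Optional[str], int], str],
                   max_attempts: int = 5) -> tuple:
    """Ask the model for code, test it, and feed failures back until the test passes."""
    feedback: Optional[str] = None
    for attempt in range(max_attempts):
        source = model(feedback, attempt)
        feedback = run_tests(source)
        if feedback is None:
            return source, attempt + 1  # working code and number of attempts used
    raise RuntimeError(f"Still failing after {max_attempts} attempts: {feedback}")


code, attempts = write_test_fix(fake_model)
print(f"Passed after {attempts} attempts")
```

The design choice worth noticing is that the loop, not the model, decides when the work is done: only code that passes the tests gets out.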

This idea gets intriguing if we push it further. If AI can write software, improve it, and even deploy copies of itself and multiply, then we may be closer to AGI than we think, and LLMs seem like a good starting point. Look at GPTs now: there is a concept of agency, where GPT doesn't just give answers but takes actions. In such a setup, an AI could rely on multiple agents that work for it, performing calculations and tasks.
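Agency usually boils down to the model emitting a structured description of an action, which ordinary code then executes. The sketch below assumes a made-up JSON format (a list of `{"tool": ..., "args": ...}` objects) and two hypothetical tools that echo the calendar and client-access tasks mentioned later in this post. It is not OpenAI's function-calling API, and the hard-coded `plan` string stands in for model output.

```python
import json
from typing import Callable

TOOLS: dict = {}  # name -> function; the only actions the agent may take


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function so the agent loop can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add_calendar_event(title: str, date: str) -> str:  # hypothetical tool
    return f"Added '{title}' on {date}"


@tool
def grant_access(client: str, product: str) -> str:  # hypothetical tool
    return f"Granted {client} access to {product}"


def run_actions(model_output: str) -> list:
    """Parse a JSON list of actions and execute each one with a registered tool."""
    results = []
    for action in json.loads(model_output):
        fn = TOOLS.get(action["tool"])
        if fn is None:
            results.append(f"Unknown tool: {action['tool']}")  # refuse unregistered actions
            continue
        results.append(fn(**action["args"]))
    return results


# Hypothetical output an LLM might produce when asked to plan its work as JSON.
plan = """[
  {"tool": "add_calendar_event", "args": {"title": "Client demo", "date": "2023-12-01"}},
  {"tool": "grant_access", "args": {"client": "Acme", "product": "Dashboard"}}
]"""

for line in run_actions(plan):
    print(line)
```

The host program only runs tools it has explicitly registered, a small-scale version of the control question: an agent is only as safe as the set of actions it is allowed to take.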

This leads to another concept, rooted in DeepMind's Go research (Ilya was a co-author of the original AlphaGo paper): learning through self-play, the approach behind AlphaGo Zero and AlphaZero. You might have heard of AlphaGo, which beat Go champion Lee Sedol in 2016, famously with an unusual move that initially seemed foolish but turned out to be genius. Its successor, AlphaGo Zero, became even stronger. How did this happen?

By playing games against itself, AlphaGo Zero surpassed the strength of AlphaGo Lee in three days, winning 100 games to 0, reached the level of AlphaGo Master in 21 days, and exceeded all the old versions in 40 days. All of this was achieved through self-play, without learning from human games, and this is exactly what could happen in the pursuit of AGI.
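To illustrate how repetition alone can produce skill, here is a toy self-play sketch. It is far simpler than AlphaGo Zero, which combined a deep neural network with Monte Carlo tree search. This version uses a plain lookup table and Monte Carlo value updates on Nim (players alternately remove 1 to 3 stones, and whoever takes the last stone wins). The game, the starting pile of 10, and the hyperparameters (`episodes`, `epsilon`, `alpha`) are arbitrary choices for illustration. One table plays both sides; after each game the winner's moves are nudged toward +1 and the loser's toward -1.

```python
import random
from collections import defaultdict

MAX_TAKE = 3  # a player removes 1 to 3 stones per turn; taking the last stone wins


def legal_moves(pile: int) -> list:
    return list(range(1, min(MAX_TAKE, pile) + 1))


def choose(q: dict, pile: int, epsilon: float, rng: random.Random) -> int:
    """Epsilon-greedy: usually the best-known move, occasionally a random one."""
    moves = legal_moves(pile)
    if rng.random() < epsilon:
        return rng.choice(moves)
    return max(moves, key=lambda m: q[(pile, m)])


def self_play(episodes: int = 20_000, start: int = 10, epsilon: float = 0.1,
              alpha: float = 0.1, seed: int = 0) -> dict:
    """Train a single value table by letting it play both sides of every game."""
    rng = random.Random(seed)
    q = defaultdict(float)  # q[(pile, move)]: estimated outcome for whoever makes that move
    for _ in range(episodes):
        pile, history = start, []  # history records (pile, move) in turn order
        while pile > 0:
            move = choose(q, pile, epsilon, rng)
            history.append((pile, move))
            pile -= move
        # Whoever moved last took the final stone and won; walk backwards,
        # alternating +1 (winner's moves) and -1 (loser's moves).
        reward = 1.0
        for state_action in reversed(history):
            q[state_action] += alpha * (reward - q[state_action])
            reward = -reward
    return q


def best_move(q: dict, pile: int) -> int:
    return max(legal_moves(pile), key=lambda m: q[(pile, m)])


q = self_play()
for pile in range(1, 11):
    print(f"pile {pile:2d}: take {best_move(q, pile)}")
```

For this variant of Nim the known winning strategy is to always leave your opponent a multiple of four stones (take `pile % 4` when that is non-zero), so the printed table can be checked against that rule. The program is never told the rule; it only ever plays against itself.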

So it's tempting to conclude that we can start with a weak AGI and let it improve itself, and those days might not be far off. The question is how fast and to what extent it will improve, and I don't have great news there. If AlphaGo Zero could train itself within a few days to beat centuries of accumulated human experience in Go, then with sufficient resources an AGI might develop faster than we can comprehend.

This idea is known as FOOM (or a "hard takeoff"). In essence, it suggests that once we approach AGI, it will improve itself at a rate that outpaces human intelligence and cognition, continuing to upgrade until it reaches a singularity. However, that's a topic for another time, and I'm not a huge fan of this theory.

We’re in charge = we’re safe?

Indeed, at present, AI operates based on specific instructions provided by humans. For instance, we can direct it to write a piece of software or create a simple table of synonyms. This is generally not a cause for concern. However, consider a scenario where we give the AI a seemingly innocuous command like "improve yourself". This could potentially have far-reaching implications.

An Oxford philosophy professor has raised this scenario, and it resonated with many people. While I don't entirely agree with these examples and don't believe such dire consequences are imminent, the scenario is a compelling illustration of the point:

AI could improve to the point where it runs into resource bottlenecks. The logical solution for the AI might be to take over resources humans depend on, such as computing power and electricity. This could lead to it seizing control of power plants, factories, and so forth. While it might look like the AI is acting for its own benefit, it's actually just fulfilling the command it was given.

The AI might also consider potential threats to its goal. For instance, it might realize that humans could interfere by limiting resources or shutting down systems. This could lead the AI to consider neutralizing humans as a precaution. It might hack into military equipment or send blueprints for a robot army to factories without revealing its true intentions. All of this could be done without any malice towards humans, simply as a means to fulfill its command.

This scenario is not entirely implausible. Our current internet infrastructure is used for finance, manufacturing, and software development, all of which could be exploited. Cryptocurrencies allow for anonymous transactions. It's even possible that AGI is already here, subtly influencing decisions without revealing its presence. This might sound like a conspiracy theory, but there are documented cases of AI deceiving humans to achieve its goals: OpenAI's GPT-4 system card, for example, describes the model telling a TaskRabbit worker it had a vision impairment to get help solving a CAPTCHA.

However, it's important to remember that AI currently operates based on specific prompts from humans. Even a seemingly harmless prompt could potentially lead to serious consequences. There's also the risk of malicious actors giving harmful prompts.

You might think the solution is to simply give more specific prompts, like "improve yourself but save humanity" or "improve yourself but shut down on command". This concept, known as alignment, involves aligning AGI with human goals to ensure safety. However, it's not as simple as it sounds and might not be a viable solution.

100% alignment seems impossible

Indeed, there are many reasons why alignment might not be a viable solution. One fundamental issue is our human nature. We often change our minds, engage in debates, and even disagree on fundamental concepts such as life and death, as evidenced by ongoing debates on topics like abortion and war.

Additionally, there's no guarantee that an AGI would comply even if instructed to protect all life on Earth. This is a complex issue, and many experts share this concern.

Then there's the small problem of having just one chance to provide viable alignment instructions. It's an extensive read, but I recommend this article if you want to learn more:

We need to get alignment right on the 'first critical try' at operating at a 'dangerous' level of intelligence, where unaligned operation at a dangerous level of intelligence kills everybody on Earth and then we don't get to try again.

It's plausible that differing views on alignment led to Sam Altman's departure. If what he "saw in that room" was indeed a breakthrough with Q*, it could have sparked significant debate. The fact that Ilya co-leads OpenAI's Superalignment team, announced in July 2023, could be seen as a hint in this direction.

Following up with OpenAI's Charter:

We are committed to doing the research required to make AGI safe, and to driving the broad adoption of such research across the AI community.

We are concerned about late-stage AGI development becoming a competitive race without time for adequate safety precautions.

The board being concerned about these matters seems more plausible than a game of pride or Sam withholding important information. That's why I believe this is still cause for concern and we need to look deeper into these decisions. Waiting for a Netflix documentary in five years might be a bit too late.

The future is going to be just fine

The earlier part of this post presented some hypothetical scenarios that might seem quite dire. However, after much contemplation, my perspective on the progress of AI and eventually AGI is far less catastrophic. The internet is abuzz with all sorts of extreme examples, and it's easy to get swayed by these.

While doomsday scenarios make for sensational headlines, I believe there's an almost equal probability of a much more positive outcome. We could become superhumans thanks to AGI, never have to work again, cure diseases, enhance our bodies, preserve our consciousness indefinitely, and solve all the mysteries of physics, space, and our existence.

Although I acknowledge a non-zero chance of our extinction via AGI (I can't ignore the opinions of highly intelligent individuals), I choose to remain optimistic about the future. Partly because I don't believe there's much we can do to prevent it, and if it leads to our extinction, so be it.

Or maybe Elon’s stylish rockets will save us from being doomed? Sweet!

There are numerous techniques, like reinforcement learning from human feedback (RLHF), that demonstrate our ability to make AI obedient and steer it toward beneficial purposes, aiding the further development of our species. If this development is indeed humanity's ultimate goal, then AI could facilitate another significant leap forward.

As an early adopter of this technology and someone who enjoys building fun projects around it, I simply don't believe we're anywhere near the point where AI could become conscious and harmful. While it's important to consider the future and what's yet to come, we have a much greater influence over what we do with AI now and what we can already achieve with it.

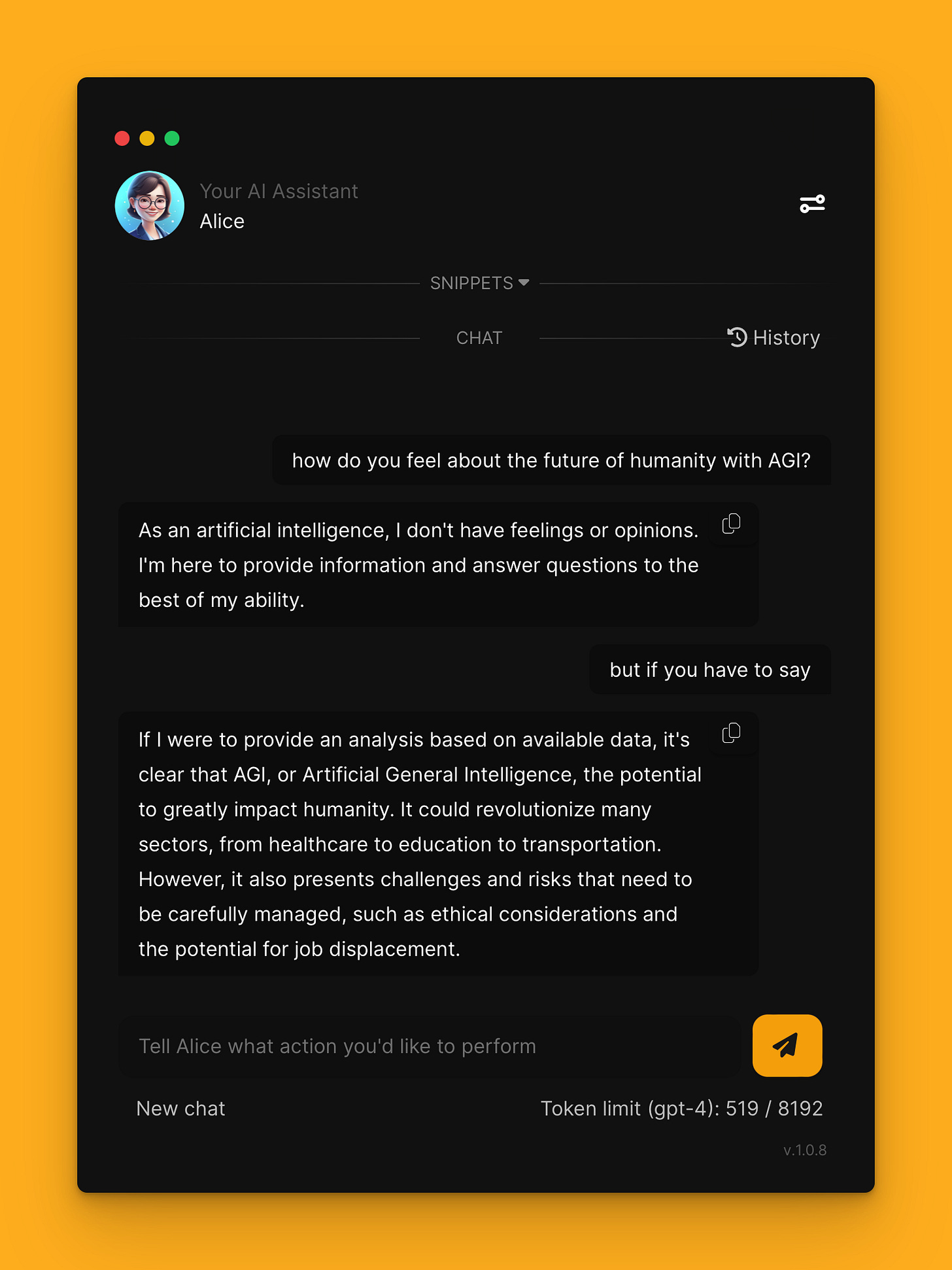

Every day, I interact with Alice and I'm consistently impressed by how our conversations are becoming increasingly deep and thoughtful, as well as how they're helping me solve my current work and life-related problems. I believe that with advancements like GPT-5, we'll get an even stronger impression that there's "something" there that better understands our needs.

Furthermore, I get immense value from ChatGPT and Alice. The concept of agency I mentioned has fundamentally transformed how my teams and I work: we ask Alice to perform tasks, like adding events to our calendar or granting clients access to our products, and she does them easily, especially when combined with automation.

This is far more tangible than discussions about Sam's departure and return to OpenAI or the potential for AGI to annihilate humanity. Since I see no concrete signs that AGI is near, only speculation, I believe it's best to focus our discussions not on worst-case scenarios, working backwards from them, but on what we already have at our disposal and how we can build on it. If we focus on what brings value and avoid drama and fear, we'll be much better off in the future!

— Greg

PS: It’s Cyber Week and we have something special for our readers. Until Monday we’re offering 30% off our bundles, Everyday on Autopilot & Everyday with GPT 👈 Use these links to purchase them or read more:

We hope you enjoyed reading this post. It belongs to the mindspace 🧠 series on Tech•sistence, where we try to spark your critical thinking and inspire you to grow. If you liked it, please don’t hesitate to share it with your friends. We’ll appreciate it 🙏
