Will AI enable the ultimate voice interfaces?

In the shadow of past voice interface failures, a revolution is unfolding. AI-powered voice chatbots are now poised to deliver substantial results and transform many areas of our work and life.

One could say that the hype around AI has somewhat subsided. From the perspective of someone who focuses on the utility of AI and its practical application, this is not bad news at all.

Now is the time to build. This is when the biggest projects will emerge, the ones that will have a real impact on how artificial intelligence supports our work.

Personally, I can’t imagine working without Alice, the app we built, but I am also aware that this is just the beginning. The most important infrastructure projects, the ones that will drive a completely new kind of software, are being developed right now. One could even say that the trend has somewhat reversed, judging by MKBHD’s recent reviews of new AI devices such as the Humane AI Pin. The title of this review speaks for itself:

Despite criticism from the AI community and accusations that the review was irresponsible, in my opinion the reviews are justified: for now, this device can only be a cool gadget for the biggest AI fans, not a useful work tool.

There is also a review of the slightly better Rabbit R1, which is still far from ideal and from what its creator promised a while back. That said, it might be a fun device to give kids who are exploring the world.

In both cases, however, the creators deliberately chose a path that is not a shortcut. Building a separate device that intentionally gives up some of the phone’s features (like touch input) to differentiate itself from it, or relying on a completely different approach to agency than APIs, makes it certain that a device like the Rabbit R1 will have a much harder time initially breaking through to general use.

Our approach with Alice is the opposite: it relies on API-based agents, which makes the app practically useful today. In the long term, however, the AI era will probably give rise to entirely new categories of software and infrastructure that support multimodality differently than typical APIs, which have their limitations.

Do you remember the voice hype?

These devices remind me of an earlier unfulfilled technological promise I wanted to write about: voice interfaces. Remember how they were supposed to revolutionize our work? The premise was correct. The implementation, unfortunately, fell short of what could be considered acceptable.

Siri, Alexa, and Cortana largely failed to live up to the hopes placed in them. They are mainly used for occasional commands to a smart home or Spotify instead of offering the promised support for our productivity.

In practice, however, it turns out that, under the radar of public opinion, it is precisely voice interfaces that have the best chance of becoming the first tool to truly support many businesses. It is no accident that infrastructure companies such as ElevenLabs are recording huge growth and astronomical valuations: the hopes placed in them are very high.

It is thanks to artificial intelligence that voice interfaces have a chance to finally fulfill the hopes placed in them. Here is what an AI-supported voice conversation can look like today:

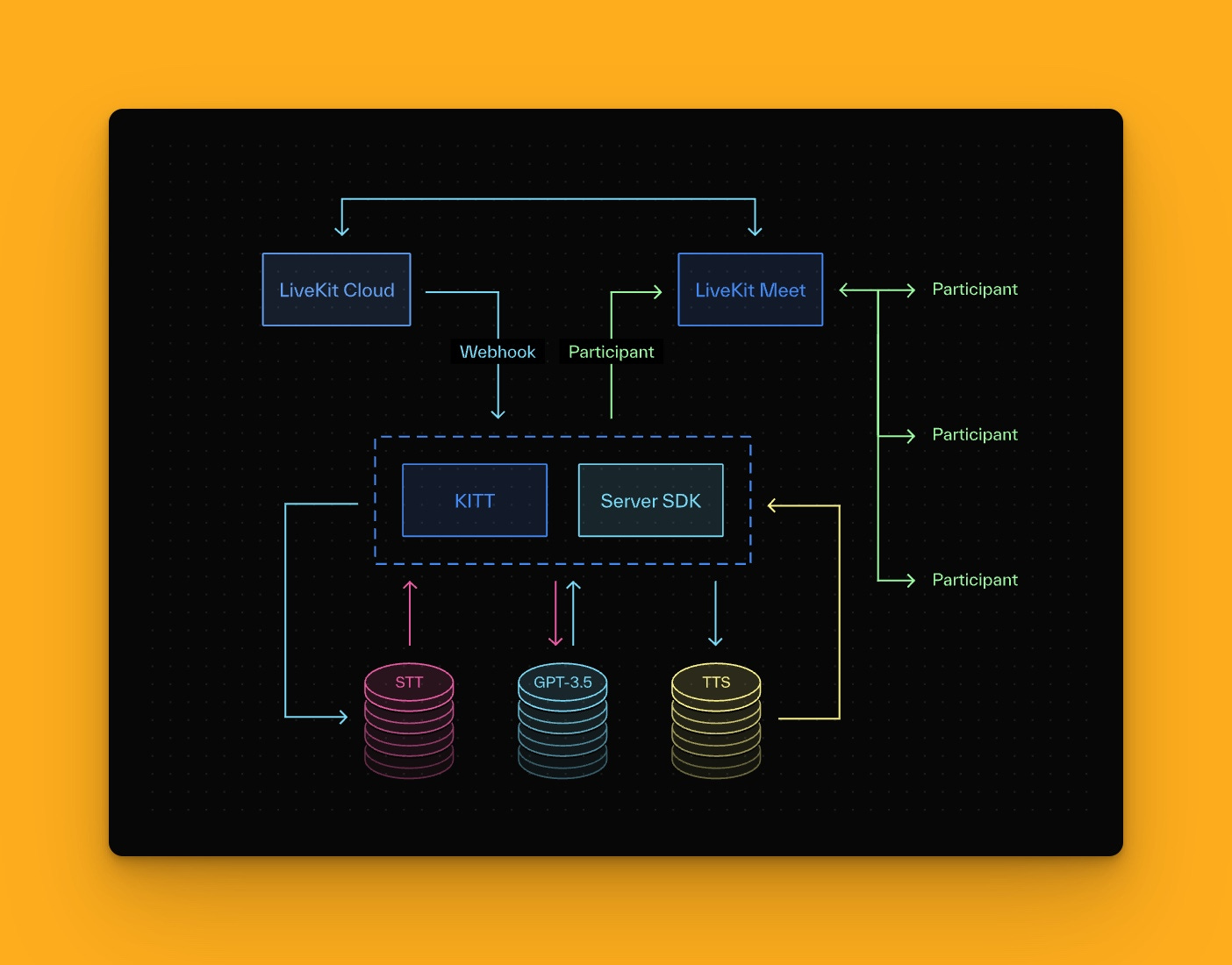

For voice interfaces to take over booking systems, reception desks, and similar interactions, several major technological improvements are needed: better silence detection (recognizing when the speaker has finished), faster response times (lower latency), and a larger context window. Without going into details, most of these have already been addressed. If you want to read more about the technical details of one approach, including using RAG to tackle latency issues, exploring and choosing the best model, and building the UI, I recommend this LiveKit blog post.
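To make "silence detection" a bit more concrete, here is a minimal sketch of the simplest possible approach: an energy threshold over short audio frames, where the user's turn ends after a run of quiet frames. The frame size, RMS threshold, and 600 ms window are hypothetical values chosen for illustration, not figures from LiveKit or any other product, and the function names are our own.

```python
import math
from typing import Iterable, Optional, Sequence

# Hypothetical tuning values; real pipelines calibrate these per microphone.
FRAME_MS = 20          # duration of one audio frame in milliseconds
SILENCE_RMS = 500.0    # RMS below this (16-bit PCM scale) counts as silence
END_OF_TURN_MS = 600   # this much continuous silence ends the user's turn


def rms(frame: Sequence[int]) -> float:
    """Root-mean-square energy of one frame of 16-bit PCM samples."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def end_of_turn_index(frames: Iterable[Sequence[int]]) -> Optional[int]:
    """Return the index of the frame at which the user's turn is considered
    finished, or None if they never paused long enough.

    Silence only counts after some speech has been heard, so quiet frames
    before the user starts talking do not end the turn.
    """
    needed = END_OF_TURN_MS // FRAME_MS  # consecutive quiet frames required
    heard_speech = False
    silent_run = 0
    for i, frame in enumerate(frames):
        if rms(frame) >= SILENCE_RMS:
            heard_speech = True
            silent_run = 0  # any speech resets the silence counter
        elif heard_speech:
            silent_run += 1
            if silent_run >= needed:
                return i
    return None
```

The window length is where silence detection meets latency: a shorter `END_OF_TURN_MS` lets the bot answer sooner but risks cutting people off mid-pause. Production systems usually replace the fixed threshold with a trained voice activity detector, but the turn-taking logic has the same shape.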

This is clearly visible in the video above. Beyond the technology itself, however, a change in mentality is necessary, as usual, along with permission to experiment, even if the early experiments are not successful.

Of course, some use cases are safer if a mistake happens, and we should probably start with those. But is it really that easy to classify them? For example, booking a table at a restaurant seems like an excellent candidate for an AI voice chatbot right now.

On the other hand, what if the AI misinterprets the answer to a question about allergies? In extreme cases, such a mistake could have fatal consequences. Then again, how often does a person answering phone calls on the run make mistakes, and which side would that statistic favor? Without going into detail, there is a simple solution: after the conversation, send a message confirming the reservation and its details, and allow changes if something is wrong. There is also the small matter of responsibility for the answers AI gives, as illustrated by a conversation in which a chatbot agreed to sell a customer a new car for $1.
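The confirmation step described above can be sketched in a few lines. This assumes the voice bot has already extracted the booking details into a structured record; the `Reservation` fields, the `confirmation_message` helper, and the change link are hypothetical names for illustration, not part of any particular booking system.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Reservation:
    """Details captured by the voice bot during the call (hypothetical schema)."""
    name: str
    party_size: int
    time: datetime
    allergies: list[str] = field(default_factory=list)


def confirmation_message(r: Reservation, change_url: str) -> str:
    """Build the text sent to the guest after the call.

    Every field the AI captured is echoed back, allergies included, so the
    guest can spot a misheard detail and fix it via the change link.
    """
    allergies = ", ".join(r.allergies) if r.allergies else "none reported"
    return (
        f"Hi {r.name}, your table for {r.party_size} is booked for "
        f"{r.time:%A, %d %B at %H:%M}.\n"
        f"Allergies noted: {allergies}.\n"
        f"Something wrong? Change your booking here: {change_url}"
    )
```

Echoing "none reported" explicitly, rather than omitting the line, matters here: a guest who mentioned an allergy on the phone will notice that it is missing.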

There is still a mental barrier to overcome, rooted in our past experiences with chatbots, which have not been particularly good. Poorly designed user experiences have caused frustration whenever we tried to get an answer to a simple question on some website, not to mention phone spam, for instance calls offering renewable energy. However, I believe that verbal communication is so fundamental and natural to us that once these barriers disappear, we will quickly switch to these interfaces without dwelling on the past. In practice, they can prove extremely useful. My teams have been using this tool for scheduling meetings for some time, and I am sure that in some cases customers would welcome voice booking or automatic confirmations.

A shoulder to cry on?

Beyond these applications, AI-assisted voice interfaces have potential in many industries and sectors, from sales to specialized services such as therapy. Of course, it’s hard to imagine a tool directly replacing an experienced therapist, but it could certainly provide support. Check out this demo by Pieter Levels.

Interestingly, this brings us into the realm of emotional intelligence, another milestone in the development of AI-supported voice interfaces. In verbal communication, we humans have developed a multitude of subtle mechanisms that let us read intentions and context. They won’t be simple to replicate with AI, but considerable progress has already been made in this field.

One organization that takes this topic seriously and whose research is worth reading in this context, if you're interested, is DARPA.

I really encourage you to try out this demo to see for yourself what kind of progress we’re talking about.

I also recommend a few interesting companies that are developing infrastructure or voice interfaces in conjunction with AI:

Adding Voice Actions to Alice

As for us, we are experimenting more and more with sound in the Alice app. Our goal is to create an assistant you can have a normal conversation with while working at your computer, one that not only answers questions but also executes actions.

You could say that we aim to create what Siri should have been from the beginning.

Alice is already very good at transcribing speech into text and reading responses aloud in a natural voice.

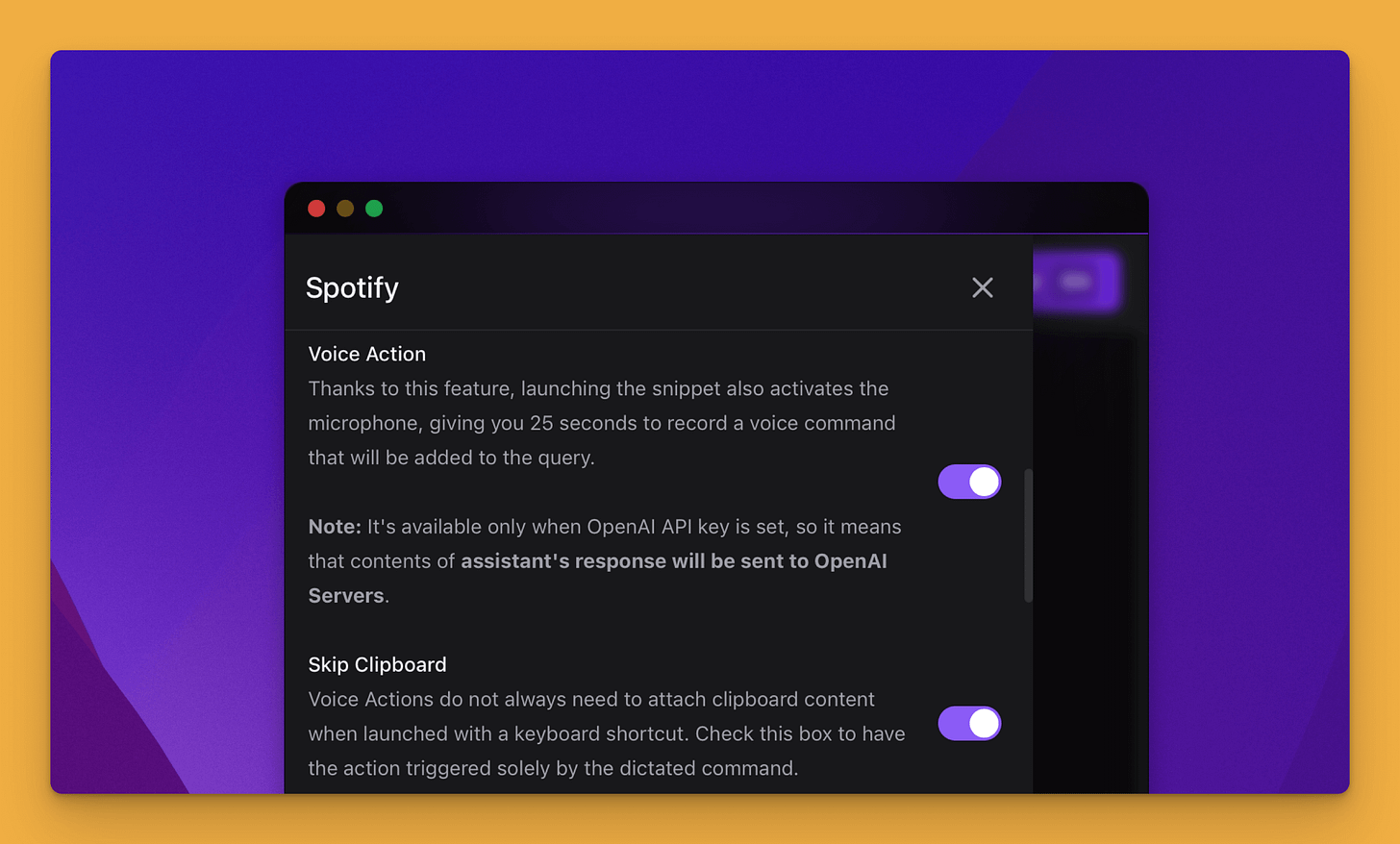

But this is just the beginning. We have just released an update that lets you create so-called Voice Actions, i.e., actions triggered by voice commands and available via a keyboard shortcut. What does this mean in practice? The Alice app can run in the background, and you can ask it, for instance, for:

A summary of the selected content

The main action to be taken from the selected email

A description of what is on the screenshot taken

This way, you can interact with AI in natural language without having to switch to the app. We went even further: since these are Voice Actions, Alice can also execute commands you give her. Snippets containing voice actions can be linked to automations, which makes it possible to issue commands such as:

“Play Billie Eilish on Spotify”

“Save this to my notes”

“Check if I’m free at the proposed time”

And much more!
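To illustrate the general idea of linking spoken commands to automations, here is a minimal routing sketch. It is not how Alice is implemented: the handler functions are hypothetical stand-ins for real integrations (Spotify, a notes app, a calendar), and plain regular expressions replace whatever intent matching a real assistant would use, which keeps the example deterministic.

```python
import re
from typing import Callable, Optional


# Hypothetical automations; a real app would call Spotify, a notes app, a calendar.
def play_music(artist: str) -> str:
    return f"playing {artist}"


def save_note(_: str) -> str:
    return "saved selection to notes"


def check_calendar(when: str) -> str:
    return f"checking availability {when}"


# Each rule pairs a pattern over the transcribed command with its automation.
ROUTES: list[tuple[re.Pattern[str], Callable[[str], str]]] = [
    (re.compile(r"^play (?P<arg>.+?) on spotify$", re.I), play_music),
    (re.compile(r"^save (?P<arg>this) to my notes$", re.I), save_note),
    # Accept both straight and typographic apostrophes in "I'm".
    (re.compile(r"^check if i[’']?m free(?P<arg>.*)$", re.I), check_calendar),
]


def dispatch(transcript: str) -> Optional[str]:
    """Run the first automation whose pattern matches the spoken command.

    Returns None when nothing matches, so the caller can fall back to
    treating the request as an ordinary chat message.
    """
    text = transcript.strip().rstrip(".!?")
    for pattern, action in ROUTES:
        match = pattern.match(text)
        if match:
            return action(match.group("arg").strip())
    return None
```

A production assistant would more plausibly let a language model map free-form speech to actions, but the overall shape stays the same: transcript in, matched automation out, and a fallback when nothing fits.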

Voice Actions in Alice are still at an early stage, and depending on your computer’s audio configuration, the feature may not always work perfectly. We will certainly keep developing it, and its possibilities will grow as the underlying technology improves.

If you don’t have Alice yet and want to download the latest version, check out heyalice.app. You can now buy the app with a lifetime license, and the basic version costs $99.