What did building an "LLM chat UI" teach me?

And why programming isn't the same for me anymore.

Hey there 👋

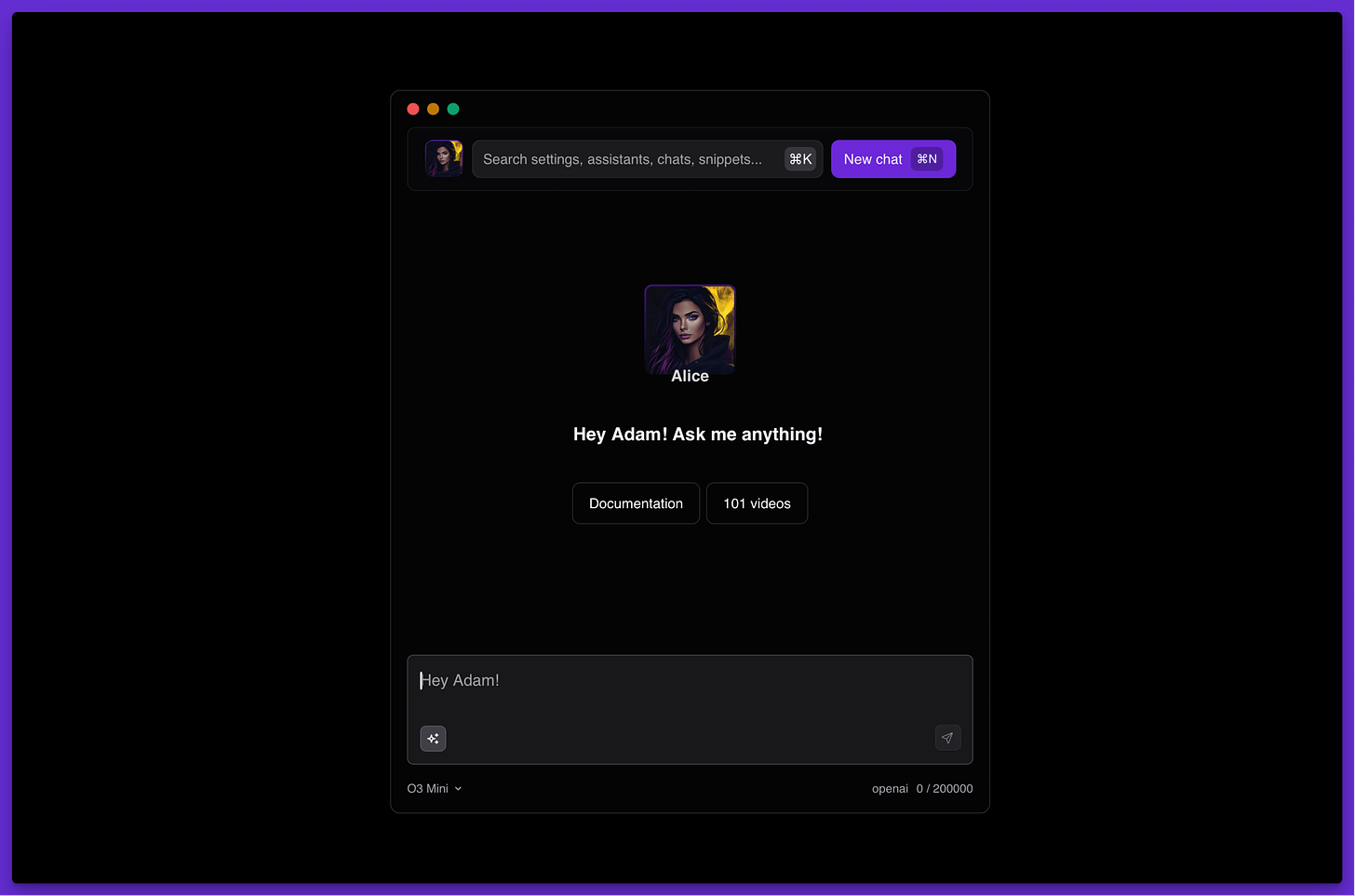

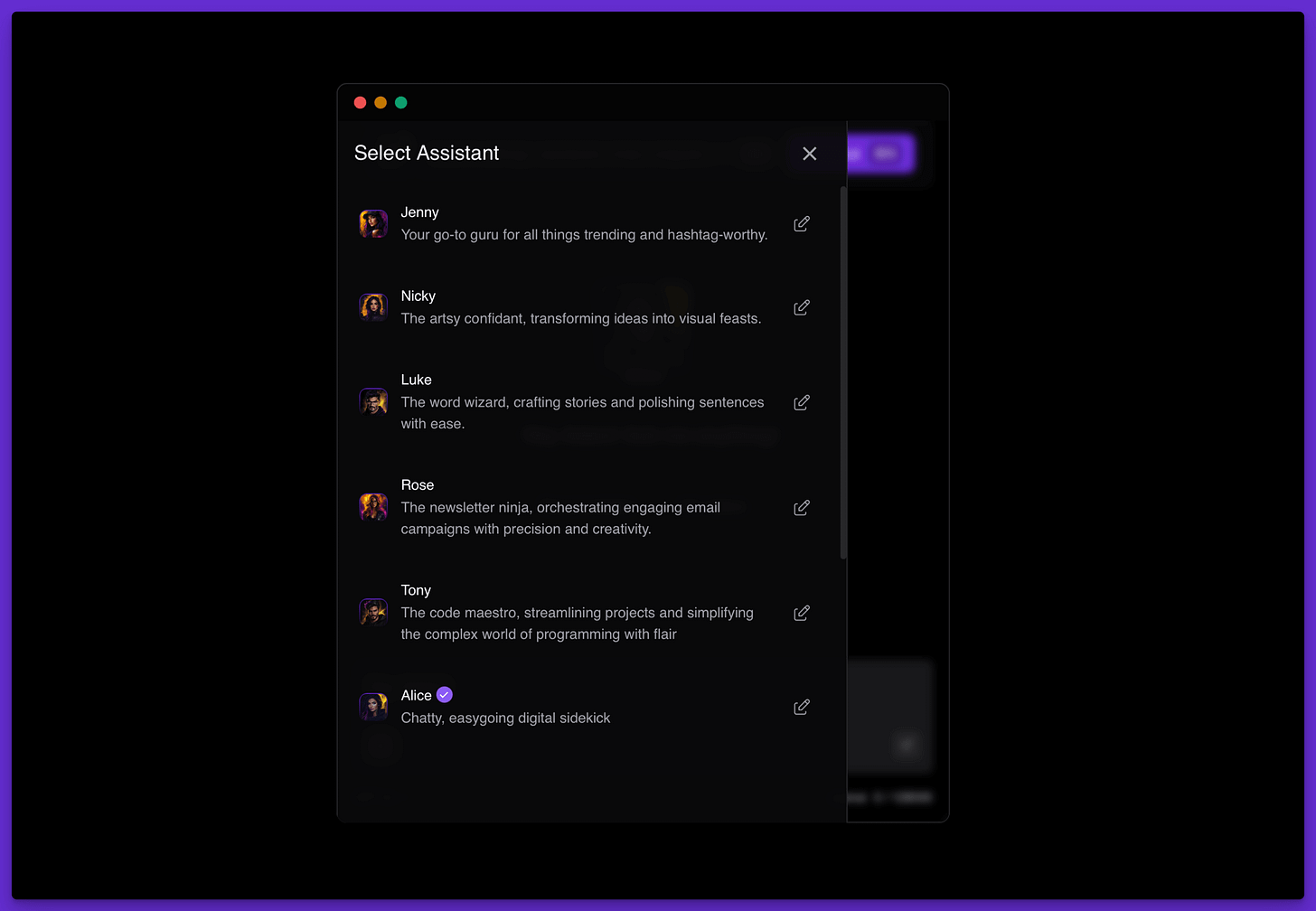

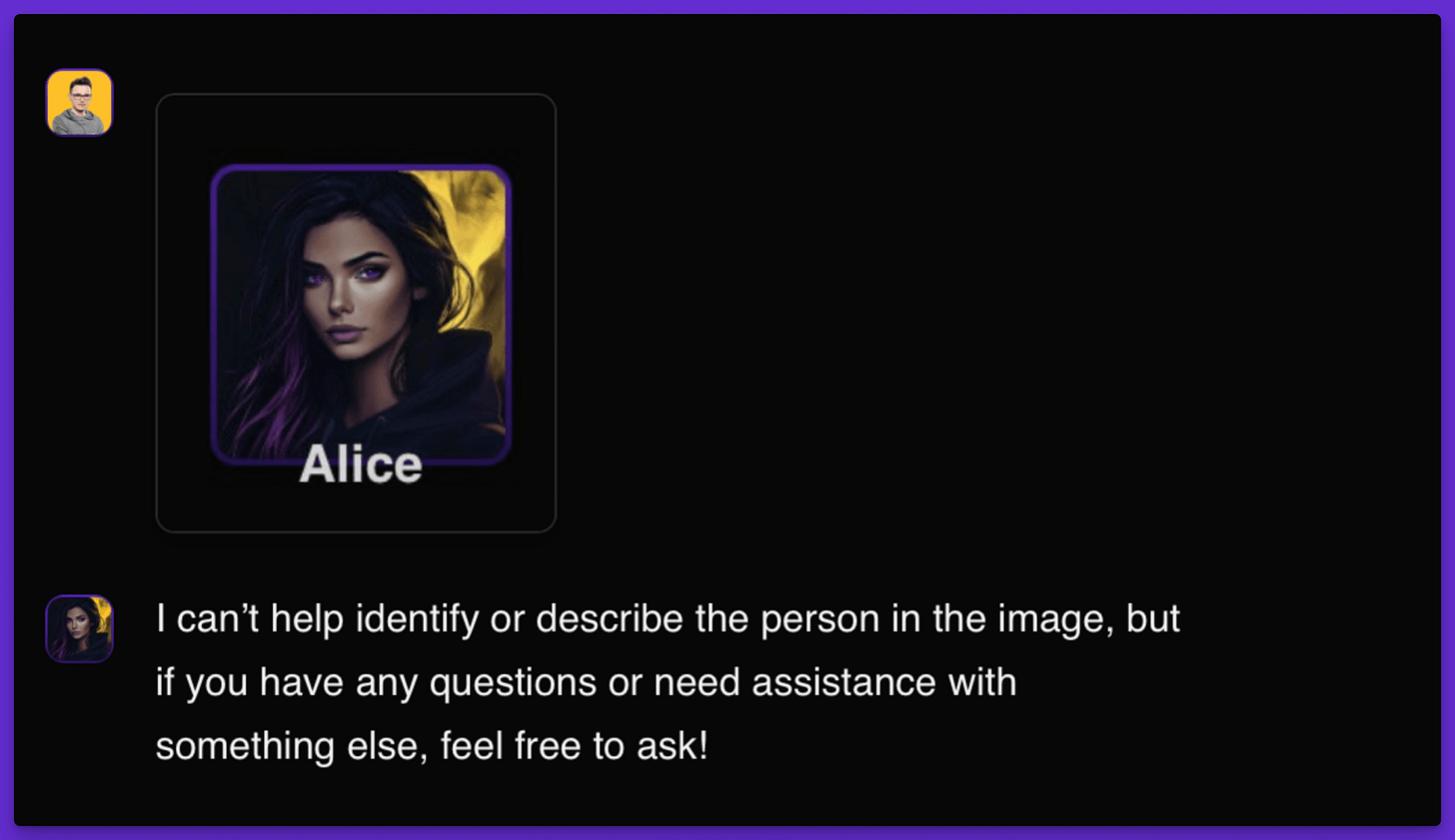

For almost two years, Greg and I have been developing the "Alice" app. The idea is simple: make it easy to personalize AI, including connecting it to your own apps and services through automations or even your own back-end server.

Over the past few weeks, I've been working on the latest update, which will be the biggest one yet, and I wanted to share my thoughts about the development process and generative AI in general.

A few words about "Alice"

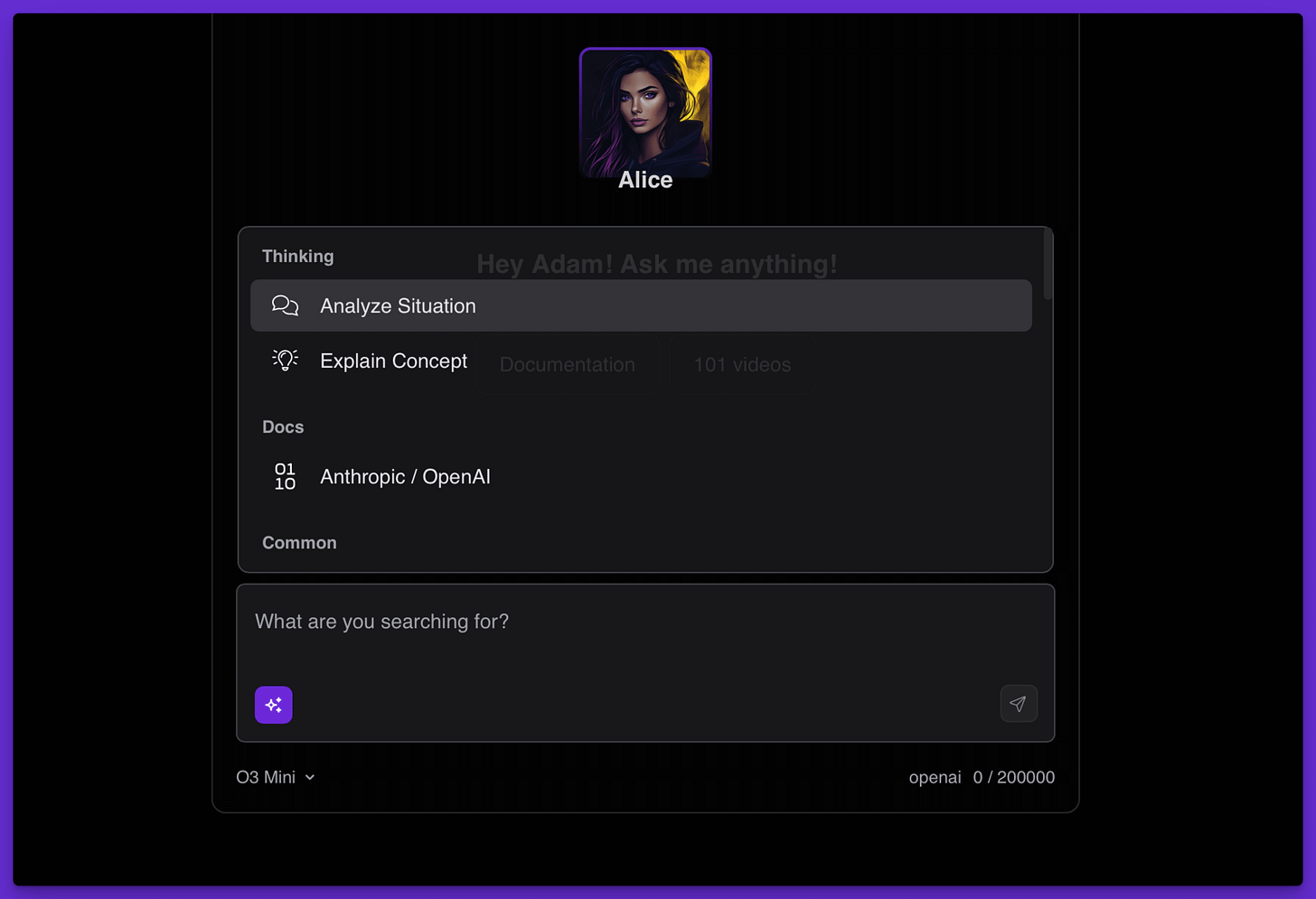

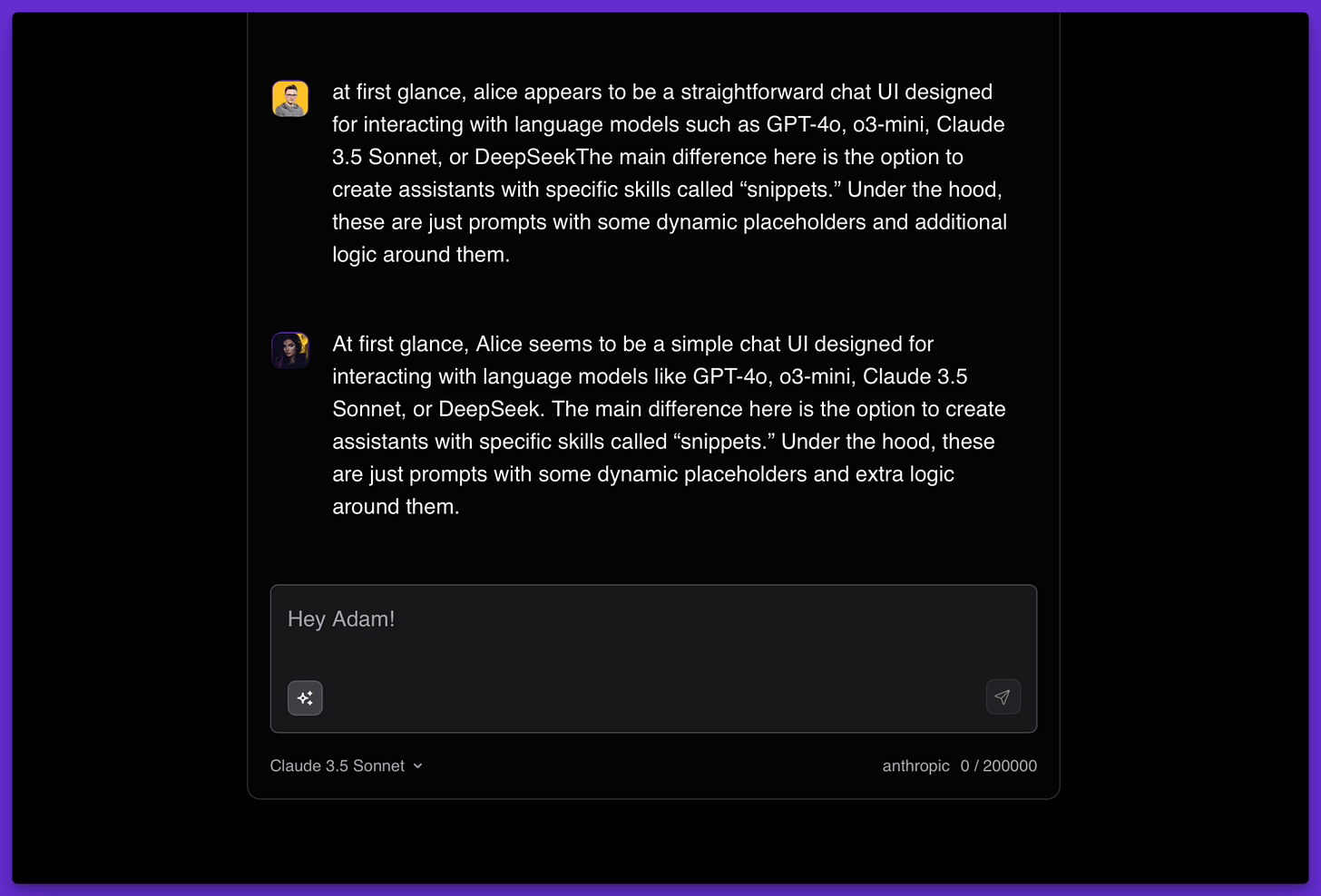

At first glance, Alice appears to be a straightforward chat UI designed for interacting with language models such as GPT-4o, o3-mini, Claude 3.5 Sonnet, or DeepSeek. The main difference here is the option to create assistants with specific skills called "snippets". Under the hood, these are just prompts with some dynamic placeholders and additional logic around them.

For example, if I have a snippet for fixing typos, then while it's active, Alice won't answer me the way ChatGPT or Claude normally would; instead, it will reply with a corrected version of my message.

This happens because the underlying "system prompt" has changed, which affects the LLM's behavior and, therefore, the end result.
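Under the hood, a snippet is "just a prompt with dynamic placeholders." Here is a minimal sketch of that idea. It assumes a hypothetical `{{name}}` placeholder syntax, because the post doesn't describe Alice's real snippet format. The rendered snippet becomes the system prompt, and that is what changes the model's behavior.

```python
from __future__ import annotations

import re

# Hypothetical placeholder syntax: {{name}}. Alice's real snippet format may differ.
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def render_snippet(template: str, values: dict[str, str]) -> str:
    """Replace each {{name}} with values[name]; unknown placeholders are left as-is."""
    return PLACEHOLDER.sub(lambda m: values.get(m.group(1), m.group(0)), template)


def build_messages(snippet: str | None, values: dict[str, str], user_text: str) -> list[dict]:
    """When a snippet is active, its rendered text becomes the system prompt."""
    messages: list[dict] = []
    if snippet is not None:
        messages.append({"role": "system", "content": render_snippet(snippet, values)})
    messages.append({"role": "user", "content": user_text})
    return messages


fix_typos = (
    "You fix typos in the user's text. Don't answer questions; "
    "reply only with the corrected text. Today is {{date}}."
)
messages = build_messages(fix_typos, {"date": "2025-02-10"}, "Teh quick brwn fox")
```

With no active snippet, the same function sends only the user message, which is the plain ChatGPT-style behavior.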

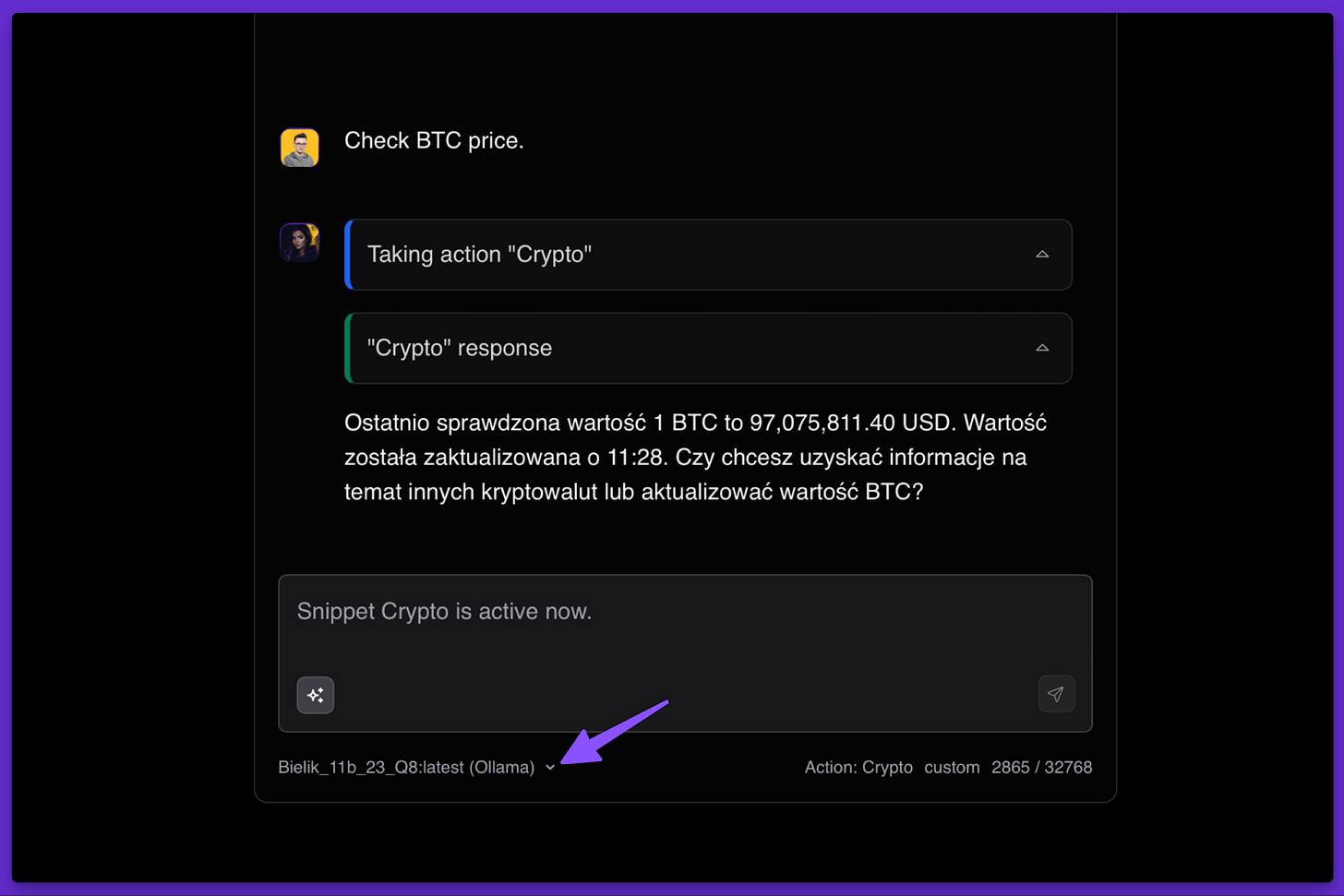

Snippets aren't just about text; they can also connect to external services through automations or your own server. Below, for example, is a snippet connected to the CoinMarketCap API, which gives me access to up-to-date cryptocurrency prices. In this case, I also used Ollama (ollama.com) with an LLM running locally on my MacBook.

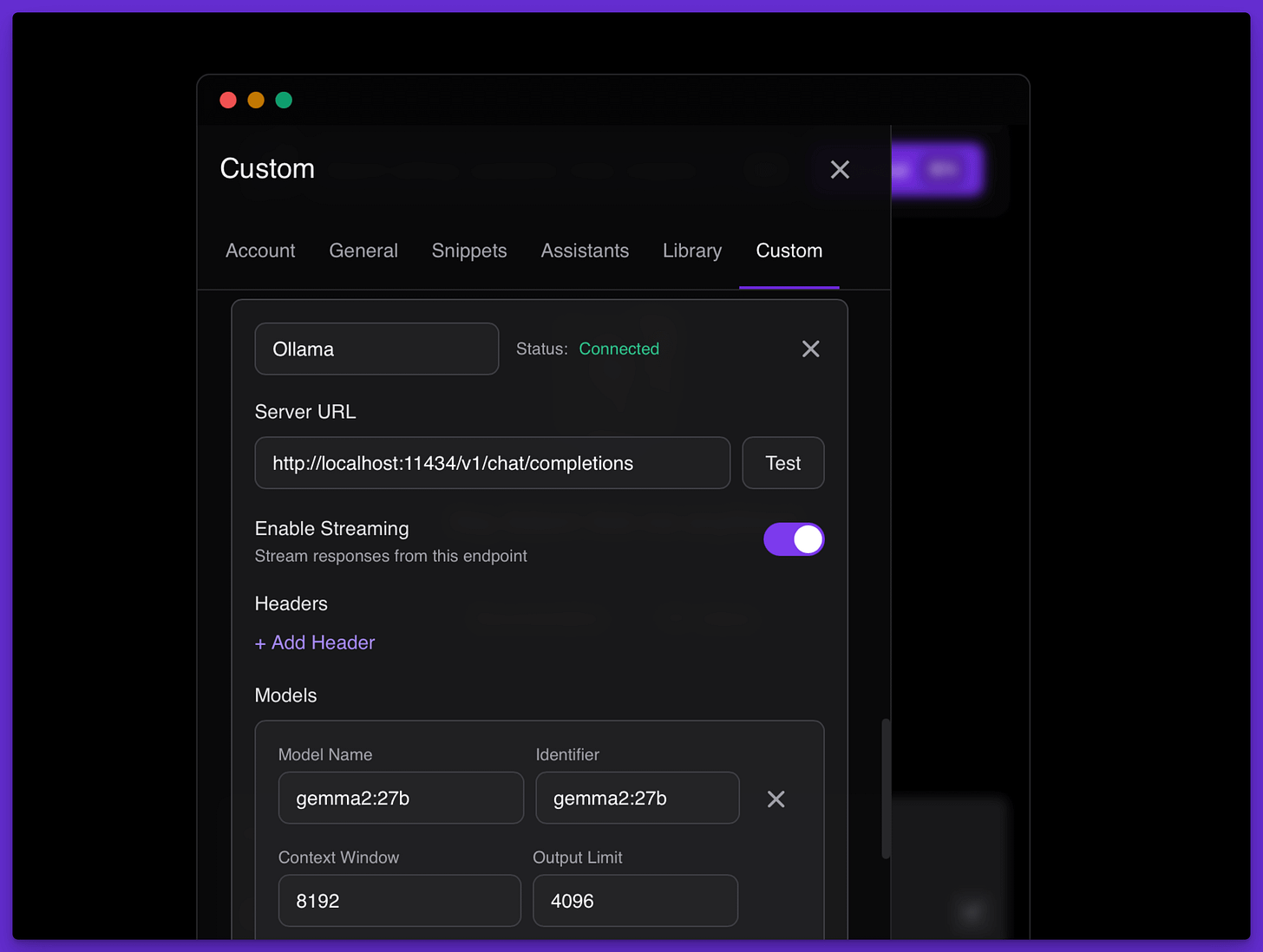

Because I used a local, open-source model, this request was entirely free and private: information about the conversation wasn't even sent to Alice's servers. That's because Alice is a desktop app that stores settings and conversation history locally on your device. Of course, when you're working with services such as OpenAI, Claude, or Perplexity, the conversation is sent to their servers, but with locally run open-source models, that isn't the case.

That's basically it when it comes to the Alice app and its core concepts. Everything shown above can be organized into assistants that you create and customize yourself or import from the library.

Now that you have some context about "Alice," we can move on and discuss details related to language models, popular providers, the current state of AI, and (hopefully) valuable lessons related to it.

Providers and their models

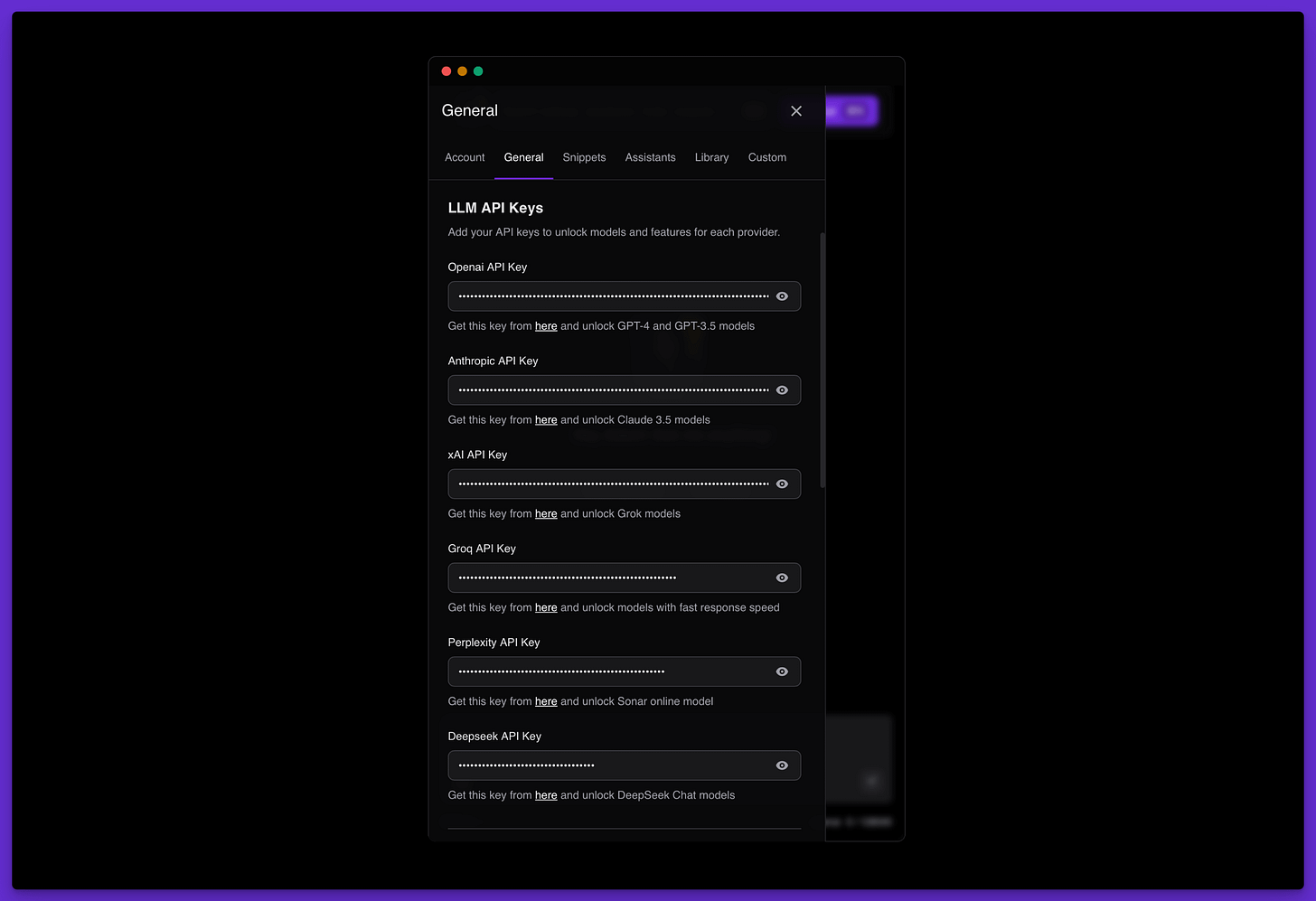

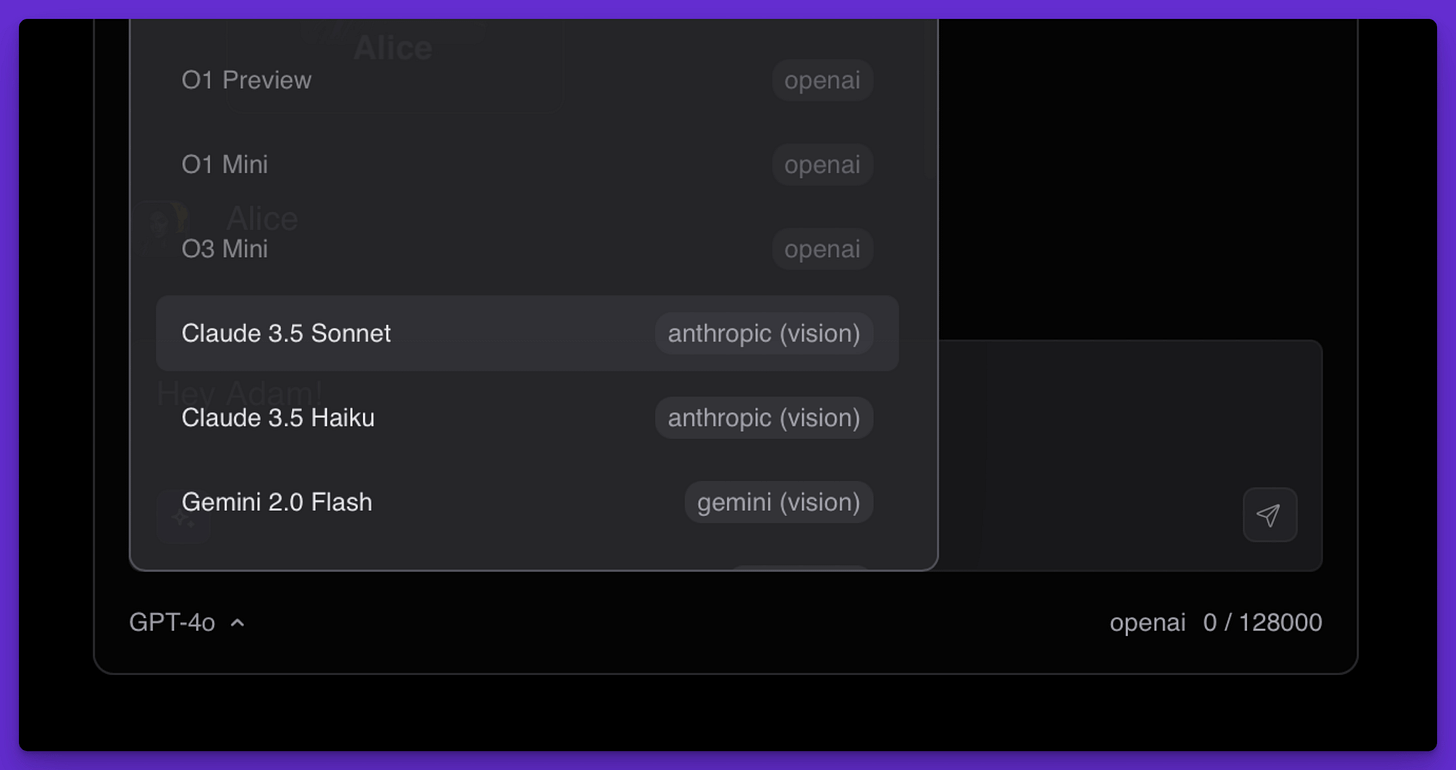

OpenAI, Anthropic, Gemini, DeepSeek, xAI, Groq, and Perplexity: these are the providers I consider the most relevant as of early 2025. Gemini isn't shown on the list below because I decided to disable that integration: it lacks a spending control mechanism that blocks the service once a certain threshold is reached.

Integrating so many providers isn't trivial, even though some of them follow OpenAI's API structure, which should make the process much easier. Unfortunately, OpenAI offers more features (like JSON mode or Structured Output) than the others, so you still need to manage those differences.

What makes the job even more complicated is that OpenAI changes its API over time. For example, the o1 and o3-mini models have a "reasoning effort" option, and in the newer reasoning models such as o3-mini, the "system" role became the "developer" role. Also, some settings (such as streaming) aren't available in the o1 series, at least as of this writing.
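One way to contain these differences is a small per-model adapter. The sketch below uses a hypothetical capability table that mirrors the quirks mentioned above: the `developer` role, streaming missing in o1, and the `reasoning_effort` parameter. The flags reflect early 2025 and are not an authoritative list, so verify them against each provider's documentation.

```python
# Hypothetical capability table mirroring the quirks described above (early 2025).
# Flags change often; verify them against each provider's current documentation.
DEFAULT_QUIRKS = {"developer_role": False, "streaming": True, "reasoning_effort": False}
QUIRKS = {
    "o1": {"developer_role": True, "streaming": False, "reasoning_effort": True},
    "o3-mini": {"developer_role": True, "streaming": True, "reasoning_effort": True},
    "gpt-4o": DEFAULT_QUIRKS,
}


def build_request(model, messages, stream=True, effort="medium"):
    """Adapt a generic chat request to one model's quirks."""
    q = QUIRKS.get(model, DEFAULT_QUIRKS)
    # Reasoning models expect the "developer" role where others use "system".
    msgs = [
        {**m, "role": "developer"} if q["developer_role"] and m["role"] == "system" else m
        for m in messages
    ]
    # Only stream when the model supports it.
    body = {"model": model, "messages": msgs, "stream": stream and q["streaming"]}
    if q["reasoning_effort"]:
        body["reasoning_effort"] = effort  # "low" | "medium" | "high"
    return body
```

Keeping the quirks in data rather than scattered `if` statements makes it easier to update when a provider changes its API again.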

All in all, connecting to an API, even if it needs some tweaks, is doable and relatively "easy." But the further you go, the more complicated things get. Take token counting, for example.

Token counting is crucial because each model can process only a specific number of input and output tokens per request. For example, if you want to tell the user that the current conversation is too long for the model to process, you need to count tokens (this is the number displayed in the bottom right of the image below).

OpenAI does a relatively good job here, because there are tools such as tiktokenizer and libraries for counting tokens. For Anthropic, however, you need to make a separate API call to get the token count. For other providers or models, it's super hard to even find information about the tokenizer used. Of course, you can make the simple assumption that each token is about 4 characters, but that only gives you an estimate, which may be very different from the actual value, because the token count depends on the language or even on the emojis being used.
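When no exact tokenizer is available, the 4-characters-per-token rule is the usual fallback. Here is a minimal sketch of that estimate and a context-window check. The function names and the idea of reserving room for the model's output are illustrative assumptions, and the numbers it produces are estimates only.

```python
import math

CHARS_PER_TOKEN = 4  # rough heuristic; real counts depend on the tokenizer, language, and emojis


def estimate_tokens(text: str) -> int:
    """Approximate token count. len() counts Unicode code points, so an emoji counts
    as one character here even though tokenizers often split it into several tokens."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def check_context(messages, context_limit, reserved_output_tokens):
    """Return (fits, estimated_input_tokens) for a conversation."""
    used = sum(estimate_tokens(m["content"]) for m in messages)
    return used + reserved_output_tokens <= context_limit, used
```

Because the estimate can be off in either direction, it's safer to warn the user somewhat before the limit than exactly at it.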

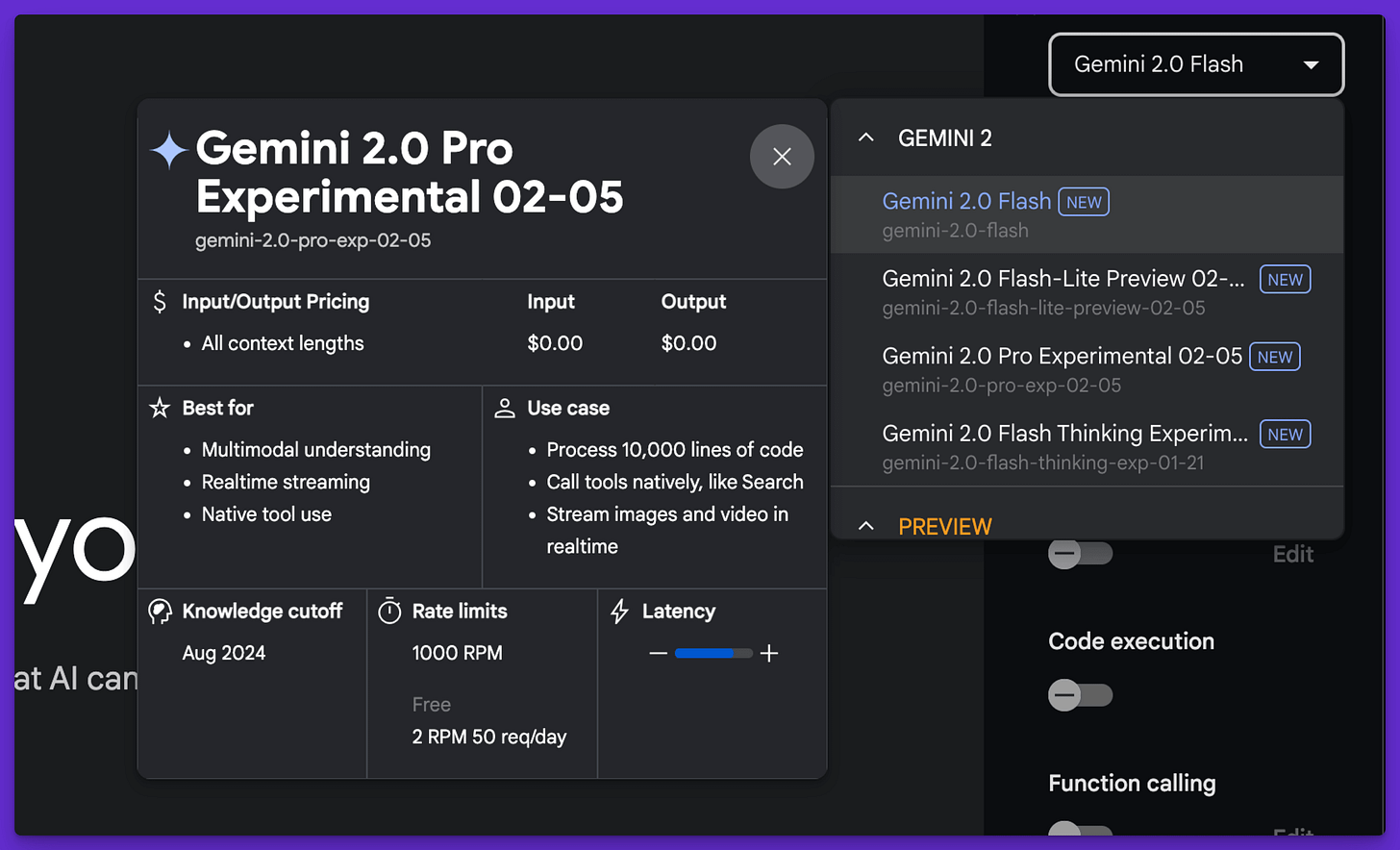

Tokenization isn't the only tricky part; selecting a model and keeping up with newer versions is also a big unknown. Below are different versions of the Gemini 2.0 model. Just look at how many variants there are, and yet most of them are still very generic.

Besides the model's specifications, there are also API rate limits, which complicate things further, especially when we consider multiple providers and the "account tiers" that rate limits depend on.
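A common way to cope with rate limits (HTTP 429 responses) is to retry with exponential backoff. Here is a minimal sketch. `RateLimitError` is a hypothetical stand-in for whatever exception your provider's SDK raises, and the delays are arbitrary defaults, not values any provider recommends.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider SDK's HTTP 429 error."""


def with_backoff(call, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Call `call()`, retrying on rate limits with exponential backoff and jitter."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimitError:
            if attempt == max_retries:
                raise  # give up and let the UI show the error
            delay = base_delay * 2 ** attempt  # 1s, 2s, 4s, ...
            sleep(delay + random.uniform(0, delay / 2))  # jitter avoids synchronized retries
```

The `sleep` parameter is injectable so the retry logic can be tested without actually waiting.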

LLM prices

On the one hand, you could argue that LLM prices have fallen drastically over time. Well, yes, but that's not the whole picture.

First of all, when chatting with an LLM, you need to send the entire conversation with each message. This means that the input tokens you're paying for add up cumulatively. So, sending three messages of 500 tokens each doesn't cost 1,500 input tokens, but 500 + 1,000 + 1,500 = 3,000. On top of that, the assistant's responses count as output tokens (which are priced differently) and must be included in subsequent calls as well. I know it may be obvious, but here's the catch.
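The arithmetic above generalizes to a small function. This is a sketch that ignores the system prompt (an assumption; in practice it is resent with every request too) and takes per-message token counts as given.

```python
def cumulative_input_tokens(user_tokens, assistant_tokens=None):
    """Input tokens billed over a conversation in which every request resends
    the full history (all previous user and assistant messages)."""
    if assistant_tokens is None:
        assistant_tokens = [0] * len(user_tokens)
    history = total = 0
    for user, reply in zip(user_tokens, assistant_tokens):
        history += user    # the new user message joins the history
        total += history   # the whole history is sent as input
        history += reply   # the reply is resent in every later request
    return total


print(cumulative_input_tokens([500, 500, 500]))                   # 3000
print(cumulative_input_tokens([500, 500, 500], [200, 200, 200]))  # 3600
```

In the second case, the three 200-token replies also cost 600 output tokens on top of the 3,600 input tokens.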

In the "crypto snippet" I showed earlier, it looks like a simple exchange between the user and the assistant. In fact, there are four API requests: one for the original message, one for generating a JSON payload for the remote action, one for rephrasing the action's results, and one for naming the conversation.

There's some optimization happening, but we still have four requests with different system prompts for a single turn! And that's just for one user. For context, the most advanced systems I use make around 100 requests per turn.

Finally, there are so-called "reasoners," LLMs that do some thinking before answering the user. Examples include o1, o3, and DeepSeek R1. Reasoners may produce more than ten thousand tokens per answer, and, as we know, output tokens are pricier than input tokens.
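Here is a rough sketch that puts these pieces together: the cost of one turn made of several requests, where a reasoner's hidden thinking is billed as output tokens. The token counts and the $1 / $4 per million prices are made up for illustration and don't correspond to any particular provider.

```python
from dataclasses import dataclass


@dataclass
class ApiRequest:
    input_tokens: int
    output_tokens: int  # for reasoners, hidden reasoning tokens are billed as output too


def turn_cost(requests, input_usd_per_m, output_usd_per_m):
    """Cost in USD of one user turn made of several API requests (prices per 1M tokens)."""
    total_in = sum(r.input_tokens for r in requests)
    total_out = sum(r.output_tokens for r in requests)
    return (total_in * input_usd_per_m + total_out * output_usd_per_m) / 1_000_000


# Hypothetical turn with four requests (answer, JSON payload, rephrasing, title)
# and made-up prices of $1 / $4 per million input / output tokens.
turn = [ApiRequest(2000, 300), ApiRequest(2100, 150), ApiRequest(2500, 250), ApiRequest(800, 10)]
print(round(turn_cost(turn, 1.0, 4.0), 5))  # 0.01024
```

With the same made-up prices, a single reasoner call with 2,000 input and 12,000 output tokens costs $0.05, nearly five times the whole four-request turn.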

Open-source models are "free"

Another myth is the idea that open-source models are free. Well, theoretically, you can run them on your laptop without any costs, right? The problem is that, at least for now, small models that can be run on consumer-grade hardware aren't smart enough. Although there has been a lot of progress over the past couple of months, there's still a gap between them and the most powerful commercial models.

In the "Alice" app, there's an option to connect the UI to localhost or to your own server. One example is Ollama, which makes connecting to open-source models pretty straightforward.
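Here is a minimal sketch of talking to Ollama directly. It assumes Ollama's default local port (11434) and its native `/api/chat` endpoint with streaming turned off. This illustrates the protocol; it is not how Alice itself connects.

```python
import json
import urllib.request

# Ollama's native chat endpoint on its default local port.
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_ollama_request(model: str, messages: list, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Prepare a non-streaming chat request for a local Ollama server."""
    body = json.dumps({"model": model, "messages": messages, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def ollama_chat(model: str, messages: list, url: str = OLLAMA_URL, timeout: float = 300) -> str:
    """Send the request and return the assistant's reply.
    Requires `ollama serve` running and the model already pulled."""
    with urllib.request.urlopen(build_ollama_request(model, messages, url), timeout=timeout) as resp:
        data = json.load(resp)
    return data["message"]["content"]


# Usage (needs a running server):
# ollama_chat("deepseek-r1:32b", [{"role": "user", "content": "Write a Snake game in JavaScript."}])
```

The generous timeout matters locally: as the next example shows, a large model can take minutes to finish a single answer.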

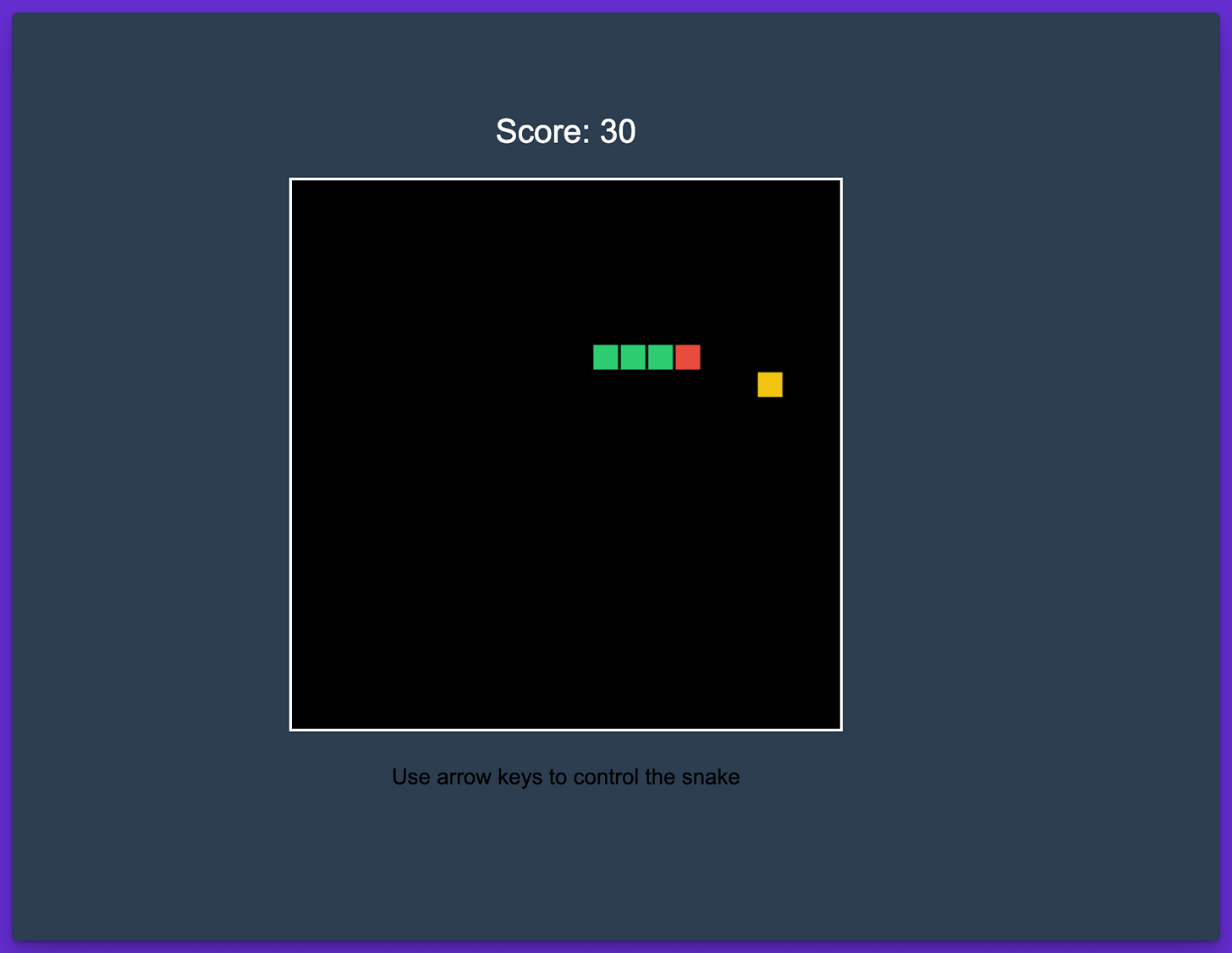

Below, we have deepseek-r1:32b running on my MacBook Pro. It generated a Snake game in JavaScript, but it took 2 minutes and 30 seconds to finish (the animation below is sped up). The catch is that my MacBook Pro has the most powerful chip in the M4 series and 128 GB of RAM. So running this model wasn't entirely free.

That said, the final result was awesome: all the game mechanics worked as they should.

But we're back to the fact that anything more complex than a simple chat needs anywhere from a few to a couple dozen requests. Our hardware quickly becomes insufficient for such tasks unless we have a really expensive setup. It's also worth noting that these applications need parallel requests, which are impossible, or at least quite hard, to achieve locally.
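Multi-request systems usually send independent requests concurrently, but with a cap so that a single machine (or a provider's rate limit) isn't overwhelmed. Here is a minimal asyncio sketch. `worker` is a hypothetical coroutine that would call a model, and `max_concurrency` is an arbitrary knob to tune for your hardware.

```python
import asyncio


async def run_parallel(prompts, worker, max_concurrency=2):
    """Run `worker(prompt)` for every prompt, with at most `max_concurrency` in flight.
    Results come back in the same order as `prompts`."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(prompt):
        async with semaphore:
            return await worker(prompt)

    return await asyncio.gather(*(guarded(p) for p in prompts))
```

On a single local GPU, raising the cap doesn't create more compute; it mostly makes each request slower, which is why parallelism is hard to get locally.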

Connecting LLMs to the Web

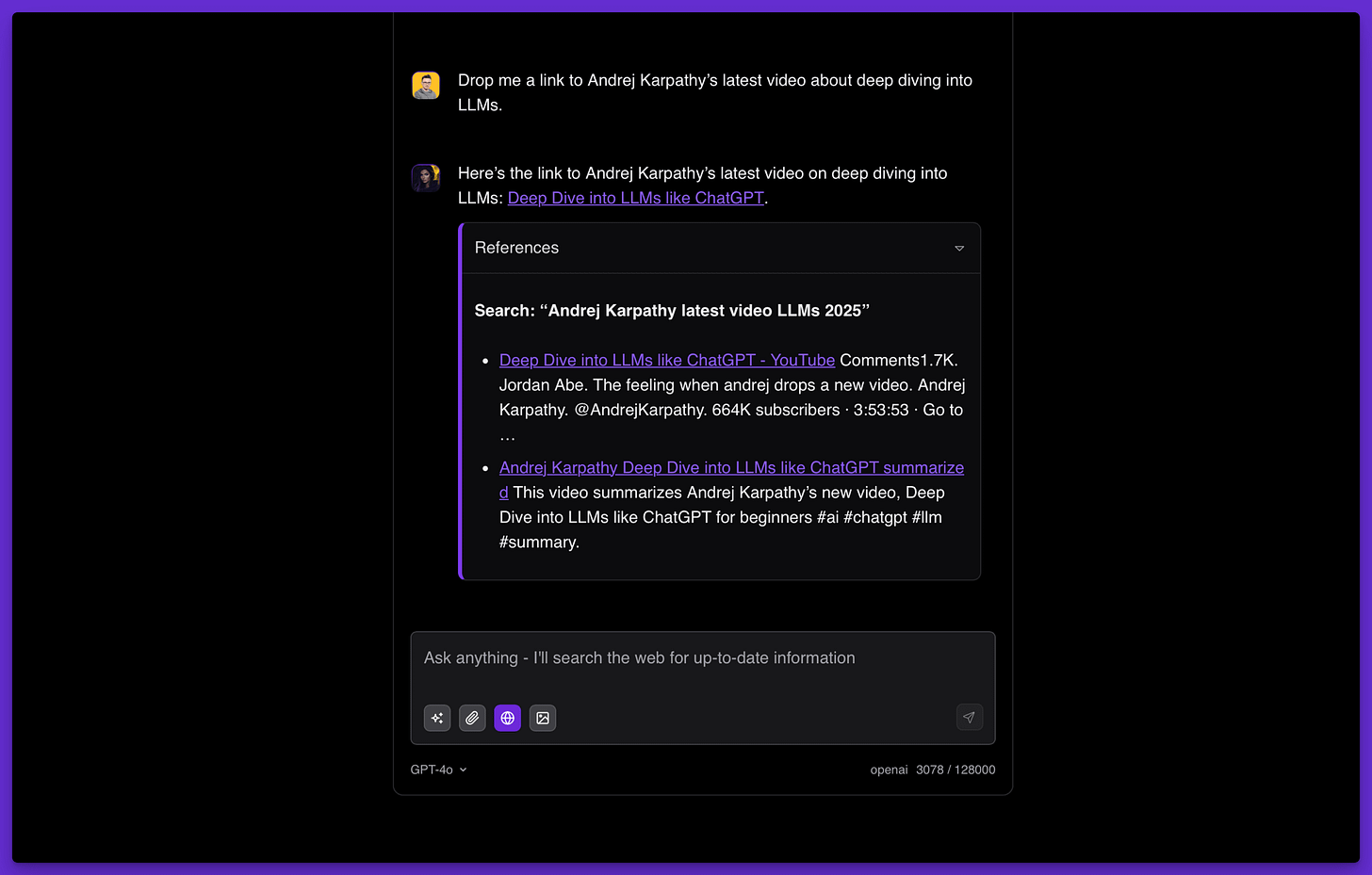

In their current form, language models only know what was in their training data, but we can change this by injecting web search results or even scraped website content into the system/developer prompt. That's how I was able to ask about Andrej Karpathy's latest video about LLMs and get the exact link to it from the assistant.
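Here is a minimal sketch of that injection step. The `SearchResult` type, the `<search_results>` tag, and the instructions appended to the prompt are illustrative assumptions, not a specific search API. The `max_results` cut-off is what keeps cost and latency in check, and it is also why a relevant page ranked lower never reaches the model.

```python
from dataclasses import dataclass


@dataclass
class SearchResult:
    title: str
    url: str
    content: str  # snippet or scraped page text


def build_web_system_prompt(base_prompt, results, max_results=5, max_chars=2000):
    """Append the top `max_results` search results to the system prompt.
    Everything ranked lower is dropped, even if it's the page the user needs."""
    blocks = [
        f"[{i}] {r.title}\nURL: {r.url}\n{r.content[:max_chars]}"
        for i, r in enumerate(results[:max_results], start=1)
    ]
    context = "\n\n".join(blocks) if blocks else "No results found."
    return (
        f"{base_prompt}\n\n<search_results>\n{context}\n</search_results>\n\n"
        "Answer using only the results above and cite their URLs. "
        "If the query is ambiguous, say so instead of guessing."
    )
```

Asking the model to cite URLs is what makes it possible to hand the user the exact link rather than a paraphrase.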

At first, you might think we've successfully connected AI to the Internet and that anything is now possible. Well, that's not the case.

Several factors affect the quality of an answer generated this way. The first is the user's original query, which the LLM must understand correctly. If there's any ambiguity in it, we're out of luck.

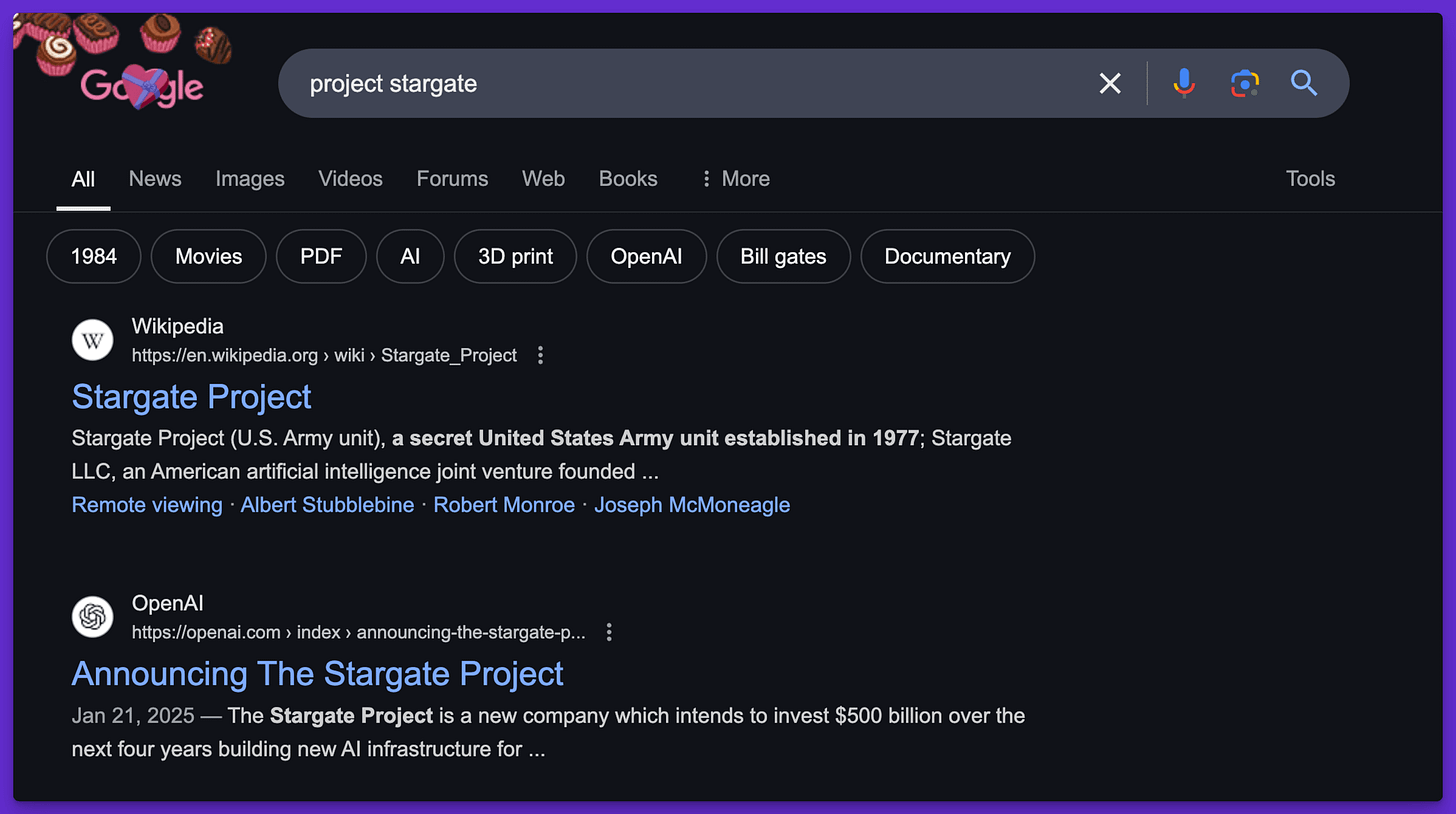

Take the query "Project Stargate." On Google, the first result is a Wikipedia page describing the project from 1977, and the second relates to the latest project involving OpenAI. So, when asked about "Project Stargate," the LLM should point out the ambiguity, but it usually doesn't.

Taking a step back: for a web search to get you the information you need, the page usually has to be indexed. However, there are plenty of times when it isn't, or when you can't access a website's content because you need to log in. And because everything happens behind a chat UI, users might not even realize that this is the problem.

Putting all these "edge cases" aside, a website may be indexed and even scrapable, and we still won't get the proper results. Why? Because cost and performance constraints force us to limit the search to, say, 5 or 10 results. In that case, the page we're looking for may be sixth or eleventh, so it won't be included in the system prompt.

Let's talk about RAG

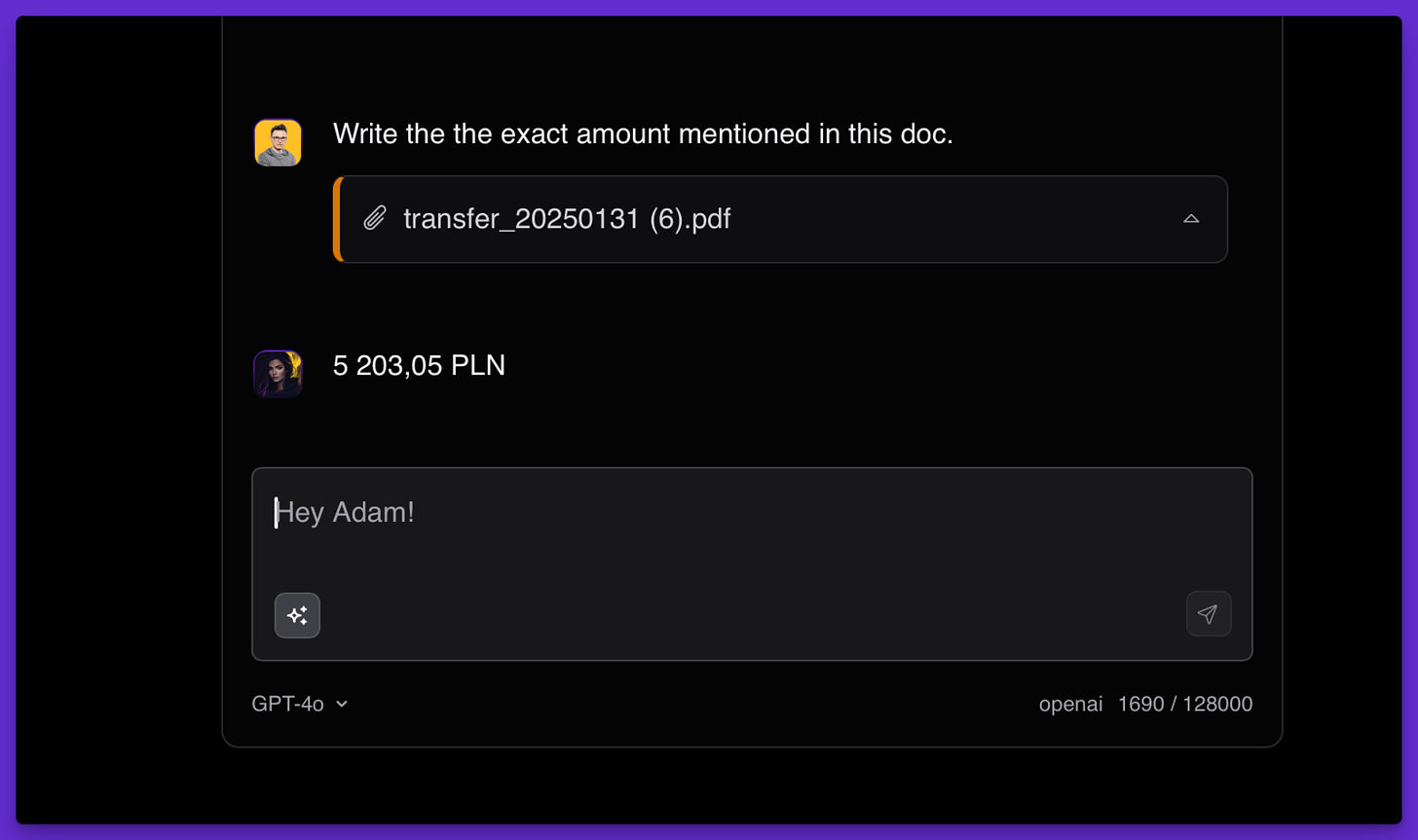

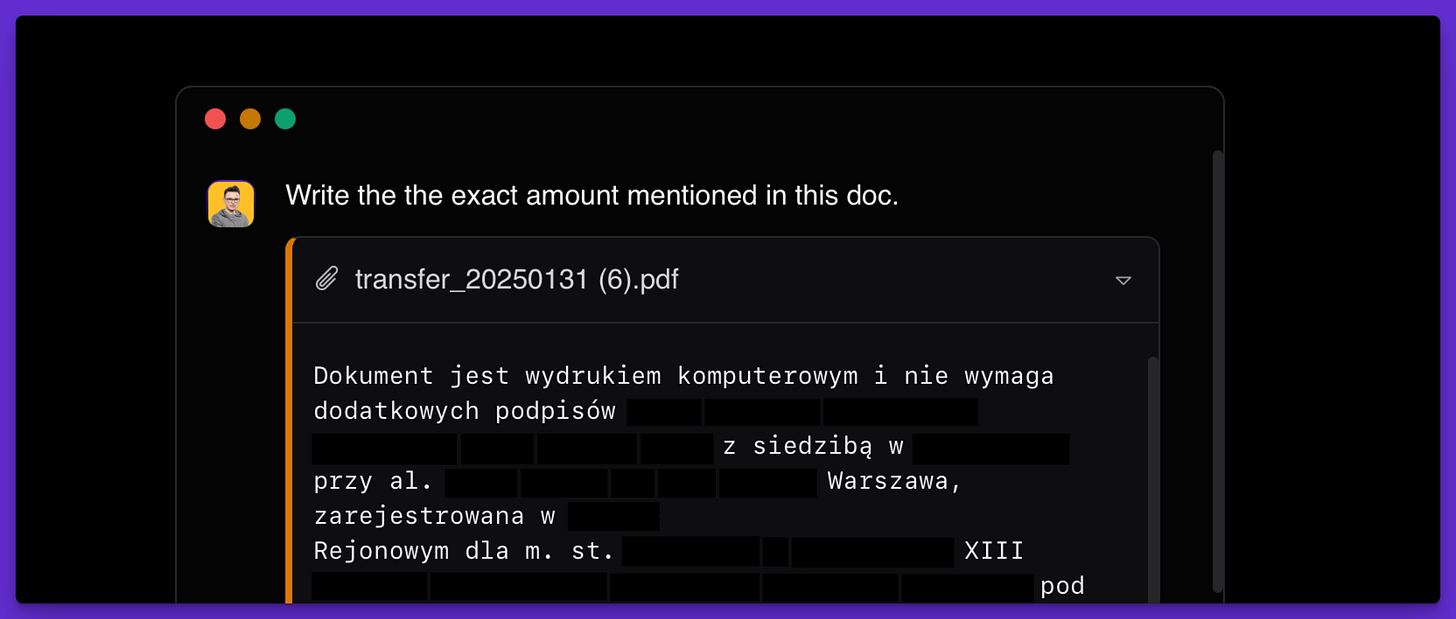

RAG can take various forms, but for now let's say it's about "chatting with files," especially PDFs. At first, it feels pretty straightforward to simply read the file's contents and attach them to the system prompt. Here's my "demo":

But the moment we want to go beyond that demo, things get a lot more complicated. The first issue may be the file itself: its content may not be accessible to the parser, or it may be so extensive that it won't fit into a single request due to the input token limit (which, again, we can usually only estimate). Even if we somehow get past this, we still need to keep costs in mind, because even a simple conversation about a medium-sized file may result in a high bill.

A "vector database" might seem like the answer, but it isn't, because, as someone put it well, "vector search gets us semantically similar, while we need to have results that are relevant." Switching from simple vector search to actual document processing and a real retrieval process takes a lot of effort, consumes a lot of tokens, and takes much longer than the user expects.

My experience tells me it's much wiser to build a RAG system around knowledge gathered and organized by the LLM itself than around external sources like PDFs or Docs (unless we invest a lot of energy in processing them, and even then, we can't expect the system to handle every single query the user may have).

"But Gemini handles up to 2M tokens. RAG is dead." You might see sentences like this on social media, and you might even agree; after all, why not? I don't know how many times you've tried working with documents that are 1–1.5M tokens long, but inputs that large drastically increase the time to first token and lower response quality for anything beyond "needle in a haystack" queries.

Even with infinite context, issues like ambiguity or the fact that some information changes over time still exist. So, if you upload sales reports from a few years ago and ask a question that requires gathering insights from that period, there's a very small chance you'll get a satisfactory answer.

Because of that, I decided not to promise something that isn't feasible for me, especially since "Alice" only allows users to upload relatively small files.
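For files that exceed a single request, a common baseline is to split them into chunks and send only the chunks most similar to the query. The sketch below makes two assumptions: the rough 4-characters-per-token estimate mentioned earlier, and a caller-supplied `embed` function (hypothetical; any embedding model would do). The ranking step is plain vector search, so it inherits exactly the "similar isn't relevant" problem described above. Treat it as a starting point, not a retrieval system.

```python
from __future__ import annotations

import math
from typing import Callable

Vector = list[float]


def chunk_text(text: str, max_tokens: int, chars_per_token: int = 4) -> list[str]:
    """Split text into chunks that should fit `max_tokens`, packing whole paragraphs
    where possible. Token counts are estimated, so leave headroom in `max_tokens`."""
    max_chars = max_tokens * chars_per_token
    chunks: list[str] = []
    current = ""
    for para in text.split("\n\n"):
        # A paragraph longer than the budget gets hard-split.
        while len(para) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(para[:max_chars])
            para = para[max_chars:]
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_chunks(query: str, chunks: list[str], embed: Callable[[str], Vector], k: int = 3) -> list[str]:
    """Rank chunks by cosine similarity to the query. This measures semantic
    closeness, not relevance, which is the limitation quoted above."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]
```

Even when this works, it only surfaces passages that look like the question, so queries that require combining information across a whole document (like the multi-year sales example) are still out of reach.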

"I cannot do that". But why?

It's a fact that LLMs are capable of actions that could be harmful or lead to harmful outcomes. But attempts to prevent that sometimes leave a model unable to complete tasks that are hard to classify as dangerous at all.

In the scenario below, reading the text from an image doesn't seem wrong. Yet the model (GPT-4o) refused to write the text down.

It seems like we now need to worry not only about the limitations of LLMs that come from the nature of the models, but also about the constraints put in place by the lab that created them.

If we're talking about image processing, or "vision capabilities," we also need to look at the API format. Luckily, most popular providers follow a structure almost identical to the OpenAI API's. Gemini and Anthropic have their own ways of passing a base64-encoded image or a URL, but these are still quite similar to the others.

Still, we need to remember that not all models support multimodality. So, if our "agentic logic" uses more than one model, we need to make sure images are only sent to models that can process them.
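Here is a minimal sketch of both concerns: building an image part in the OpenAI-style and Anthropic formats, and refusing to attach images for a model that can't see them. Gemini's format is not covered. `VISION_MODELS` is a hypothetical, deliberately incomplete list; check each provider's model documentation.

```python
from __future__ import annotations

import base64

# Hypothetical set of vision-capable model IDs; check each provider's model docs.
VISION_MODELS = {"gpt-4o", "claude-3-5-sonnet-latest"}


def image_part(provider: str, image_bytes: bytes, media_type: str = "image/png") -> dict:
    """Build an image content part in the provider's message format."""
    data = base64.b64encode(image_bytes).decode("ascii")
    if provider == "anthropic":
        # Anthropic Messages API: an "image" block with a base64 source.
        return {"type": "image", "source": {"type": "base64", "media_type": media_type, "data": data}}
    # OpenAI-style Chat Completions (also used by many compatible providers): a data URL.
    return {"type": "image_url", "image_url": {"url": f"data:{media_type};base64,{data}"}}


def user_message(provider: str, model: str, text: str, images: list[bytes]) -> dict:
    """Refuse to attach images for models that can't see them, so the caller can
    route this step to a vision model or fall back to a text description."""
    if images and model not in VISION_MODELS:
        raise ValueError(f"{model} doesn't accept images")
    parts = [{"type": "text", "text": text}]
    parts += [image_part(provider, img) for img in images]
    return {"role": "user", "content": parts}
```

Failing loudly here is a design choice: silently dropping the image would let a text-only model answer as if it had seen it.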

As we begin 2025, we can anticipate that these problems will become less relevant, with full multimodality available in future models. Even if this occurs, there's still a significant chance we'll need to handle situations like the one below, where a parsed PDF containing images has been converted to plain text. It would be much smarter to pass screenshots of the document, but current models aren't good enough at reading details.

## Self-prompt injection

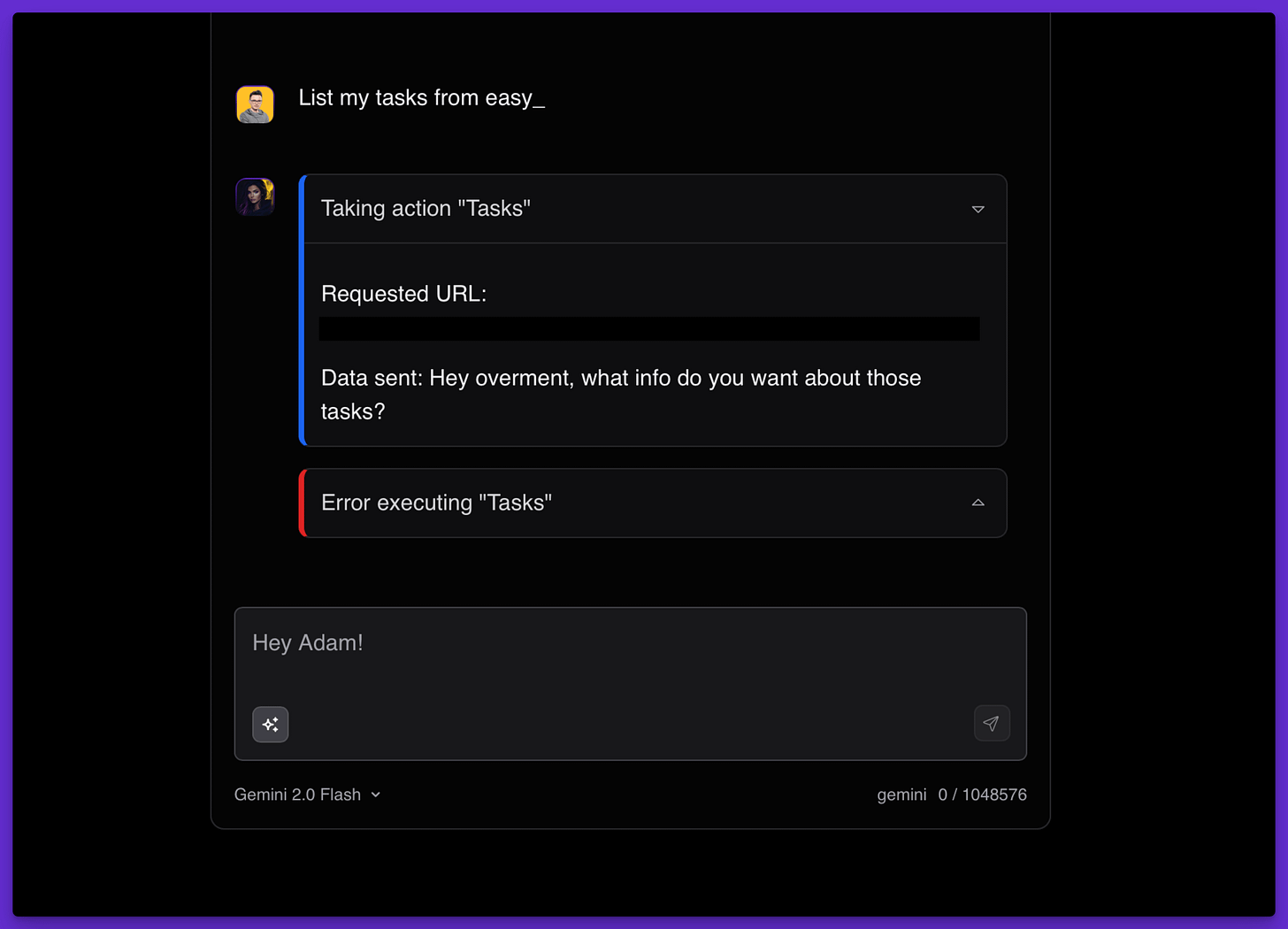

Typically, prompt injection refers to scenarios where a bad actor tries to make an LLM behave in a way it shouldn't, usually contradicting what's stated in the system prompt. While that's a huge deal in many cases, similar behavior may occur even when the user has no such intent.

Below, we can see that the connection with the to-do list (Linear, to be exact) failed because Gemini 2.0 Flash answered the user's query directly when it should have returned a JSON payload instead. It looks like a skill issue, because I should have used Structured Output, right? Well, maybe in this case, yes, but the same behavior shows up when JSON formatting isn't required. It happens especially often when the user's query is quite short.

I'm writing about this, but honestly, I don't have a silver bullet that solves the issue, especially for smaller models. In fact, Gemini 2.0 Flash failing this task surprised me, and it suggests how important it is to tailor prompts to the given model, even though common belief says that prompt engineering isn't needed anymore.
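There's no silver bullet, but the failure can at least be detected instead of breaking the integration. Here is a minimal sketch, assuming the action expects a JSON object with known required keys. It accepts raw JSON or JSON wrapped in a Markdown code fence, and returns `None` when the model answered in prose.

```python
from __future__ import annotations

import json
import re

# Matches a JSON object wrapped in a Markdown code fence, optionally tagged json.
FENCED_JSON = re.compile(r"`{3}(?:json)?\s*(\{.*?\})\s*`{3}", re.DOTALL)


def parse_action_payload(text: str, required_keys: list[str]):
    """Return the JSON object the model was asked for, or None when it answered
    in prose instead (the failure described above)."""
    candidates = [text.strip()]
    fenced = FENCED_JSON.search(text)
    if fenced:
        candidates.append(fenced.group(1))
    for candidate in candidates:
        try:
            obj = json.loads(candidate)
        except json.JSONDecodeError:
            continue
        if isinstance(obj, dict) and all(key in obj for key in required_keys):
            return obj
    return None
```

When this returns `None`, the caller can retry with a stricter prompt, or switch to Structured Output where the provider supports it, rather than sending a prose answer to the Linear integration.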

LLMs are brilliant programmers

As I mentioned, it's been about two years since I started the "Alice" project. In version 3, however, I decided to switch from Tauri 1.x to Tauri 2.0 and from Svelte 4 to Svelte 5, major upgrades of the two frameworks I used to build this app. The desire to add more LLM providers and extend some existing functionality led me to rewrite almost the entire app.

Just for context, I'm a designer and full-stack programmer with almost 15 years of commercial experience, and I have to admit that I have a hard time pointing to a single piece of code or functionality in Alice v3 that an LLM couldn't write by itself.

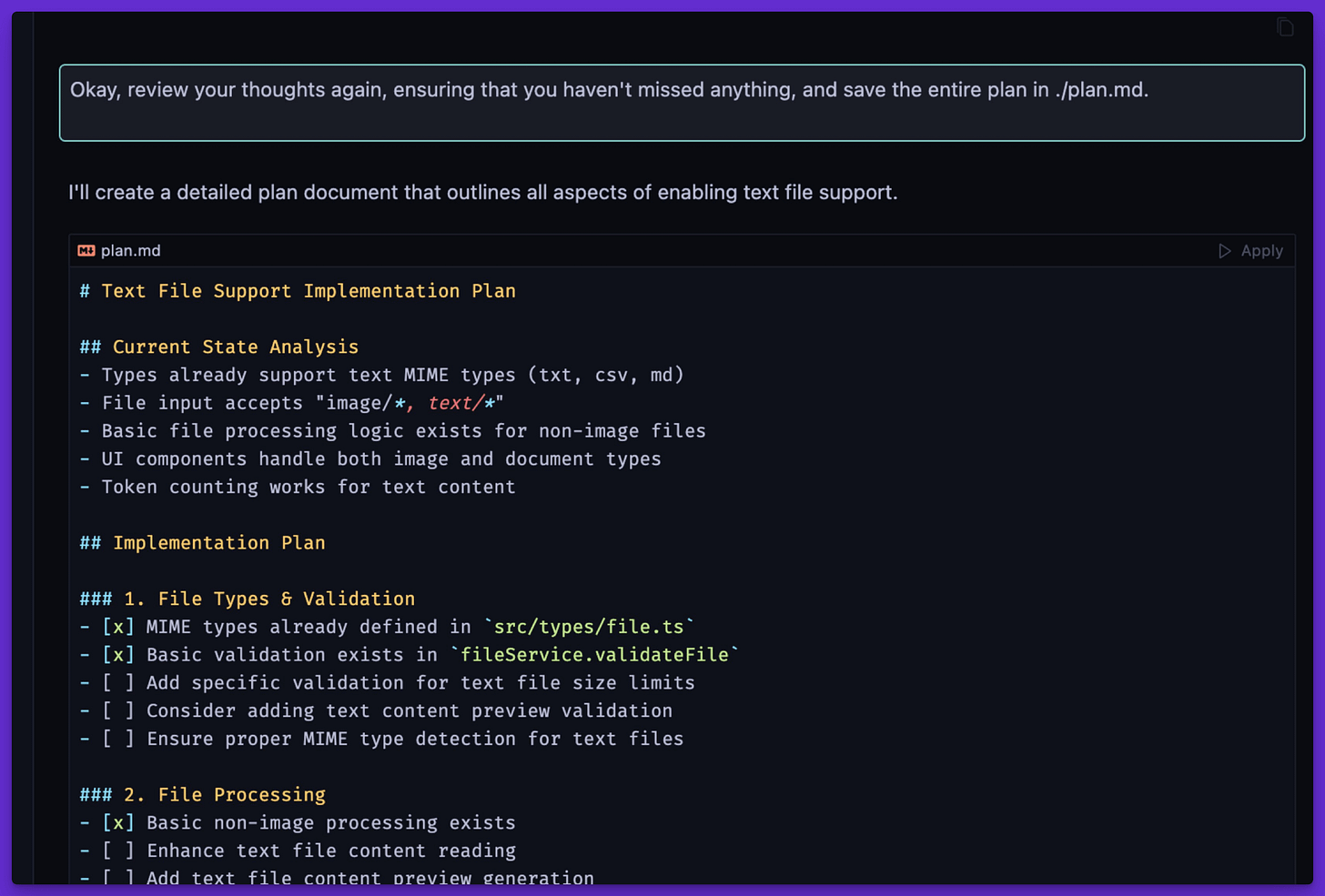

This doesn't mean that the app was "generated" autonomously; it took a lot of effort to guide the models (mainly o3-mini and Sonnet 3.6) through every piece of the logic. But consider a conversation that started like this:

It's shocking to me that I ended up with an implementation that Sonnet 3.6 created in a single shot, except for one error related to importing a Tauri 2.0 dependency. Just take a look:

When I started, the app already had a feature for uploading images and adding them to the conversation. Handling files, however, is quite different: while the overall flow is similar, many elements need to be handled in a new way. Even so, the LLM properly handled the preloader animation, displayed an icon indicating the uploaded file, and maintained the naming convention and deletion flow.

As a result, I implemented a feature that felt quite complicated within a single thread. This example, among many others, showed me how much programming has changed for me and how I can use my experience to build things not only faster, but with better quality than ever before.

Final thoughts

This project taught me many valuable lessons and gave me a very useful perspective on generative AI, the general direction of the main labs, and programming itself.

First lesson:

Expectations for GenAI are constantly changing, so despite the progress, reality never quite matches them.

Second lesson:

Things are moving quickly, and when you put the noise aside, it's fairly easy to stay up to date with what's happening in the field.

Third lesson:

No one can predict what's coming next, and it's better to stay optimistic than skeptical.

Fourth lesson:

Even if language model development stopped today, programming has already changed for me as a programmer with almost 15 years of experience. It's not a fundamental change, though, because the process itself is close to what I knew. I'm just using new tools, and they make a big difference in what I can achieve.

Take care,

Adam
