Rethinking ChatGPT on macOS — Alice 2.0 UI reveal

For almost a year, our teams and 300+ students of our programs have been using Alice, a native AI assistant app that can run actions. Now we're close to releasing Alice 2.0 with a revamped UI.

The activities we perform every day, which are crucial for our productivity, boil down to communication. This could be a conversation with team members, or with an LLM through an interface like ChatGPT, or even obtaining information from resources on Stack Overflow or Perplexity.

Such communication consists of the input we provide, for example a question typed into Google, and the result we get back. However, this scenario is far from ideal for productivity, because it involves friction twice: first in getting an answer to the question (it is often inaccurate, or takes a long time to find), and then in turning that answer into action.

Efforts to work more efficiently have always aimed to reduce this friction, whether through better organized and more accessible knowledge sources (documentation, a Second Brain, digital gardens) or through automations that actually perform previously defined tasks.

Our adventure with LLMs started in earnest at the beginning of last year. That's when Adam, exploring an early version of the OpenAI API, came to a simple and brilliant conclusion:

AI does quite well at answering a question in the JSON format, which we use in over 1000 of our automations.

Today, this is obvious to everyone, and extensions to models, GPTs in ChatGPT, and function calling use similar standards and assumptions. However, when we first discovered this, it was not common knowledge.

Until then, we thought that the automations we had been building since the earliest days of tools like Zapier or make.com were almost the ultimate tool in the service of productivity. Every day, thousands of robots helped us format content, manage website content, handle CRM, send emails, review complaints, correct invoices, and much more.

We instantly realized that what had so far required carefully prepared input data, for example customer data split into a table with email, first name, and surname, an LLM could handle from a query like this:

My dear, add a monthly subscription for John Doe.

Responding with love:

{ "first-name": "John", "last-name": "Doe", "action": "add-subscription", "period": "30 days" }

The response is then sent as a request to our webhook address, which routes it straight to the appropriate automation and launches it, performing the requested action.
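The flow described above can be sketched in a few lines of Python. This is only a minimal illustration, not how Alice is implemented: the webhook URL, the list of required fields, and the function names `parse_model_reply` and `build_webhook_request` are all assumptions made for the example.

```python
import json
import urllib.request

# Hypothetical webhook address; the real one is not given in this post.
WEBHOOK_URL = "https://example.com/webhook/add-subscription"

# Fields we assume the automation expects, based on the example reply above.
REQUIRED_FIELDS = ("first-name", "last-name", "action", "period")


def parse_model_reply(reply: str) -> dict:
    """Parse the model's JSON reply and check that the expected fields exist."""
    payload = json.loads(reply)
    missing = [field for field in REQUIRED_FIELDS if field not in payload]
    if missing:
        raise ValueError(f"model reply is missing fields: {missing}")
    return payload


def build_webhook_request(payload: dict, url: str = WEBHOOK_URL) -> urllib.request.Request:
    """Wrap the payload in a JSON POST request (nothing is sent yet)."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


reply = '{"first-name": "John", "last-name": "Doe", "action": "add-subscription", "period": "30 days"}'
request = build_webhook_request(parse_model_reply(reply))
# urllib.request.urlopen(request)  # uncomment to actually send the request
```

Checking the reply before sending it matters because a model can return malformed or incomplete JSON, and it is cheaper to reject that locally than to debug a half-run automation.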

However, we needed a tool that would let us easily refer to such actions and send queries. Ideally, most of them would be launched with a keyboard shortcut, and thus the concept of Alice was born: a native macOS application that would let us customize the way we interact with artificial intelligence.

Why not ChatGPT? Unfortunately, ChatGPT, even today, although it offers a semblance of such capabilities (plugins, function calling, GPTs), does not do it in an optimal or effective way. Its use most often boils down to obtaining an answer, which then needs to be verified, and only later implemented. Actions, for example, those performed with the help of Zapier, can only be treated as a presentation of the concept and a curiosity, which is far from production applications and a real impact on our productivity.

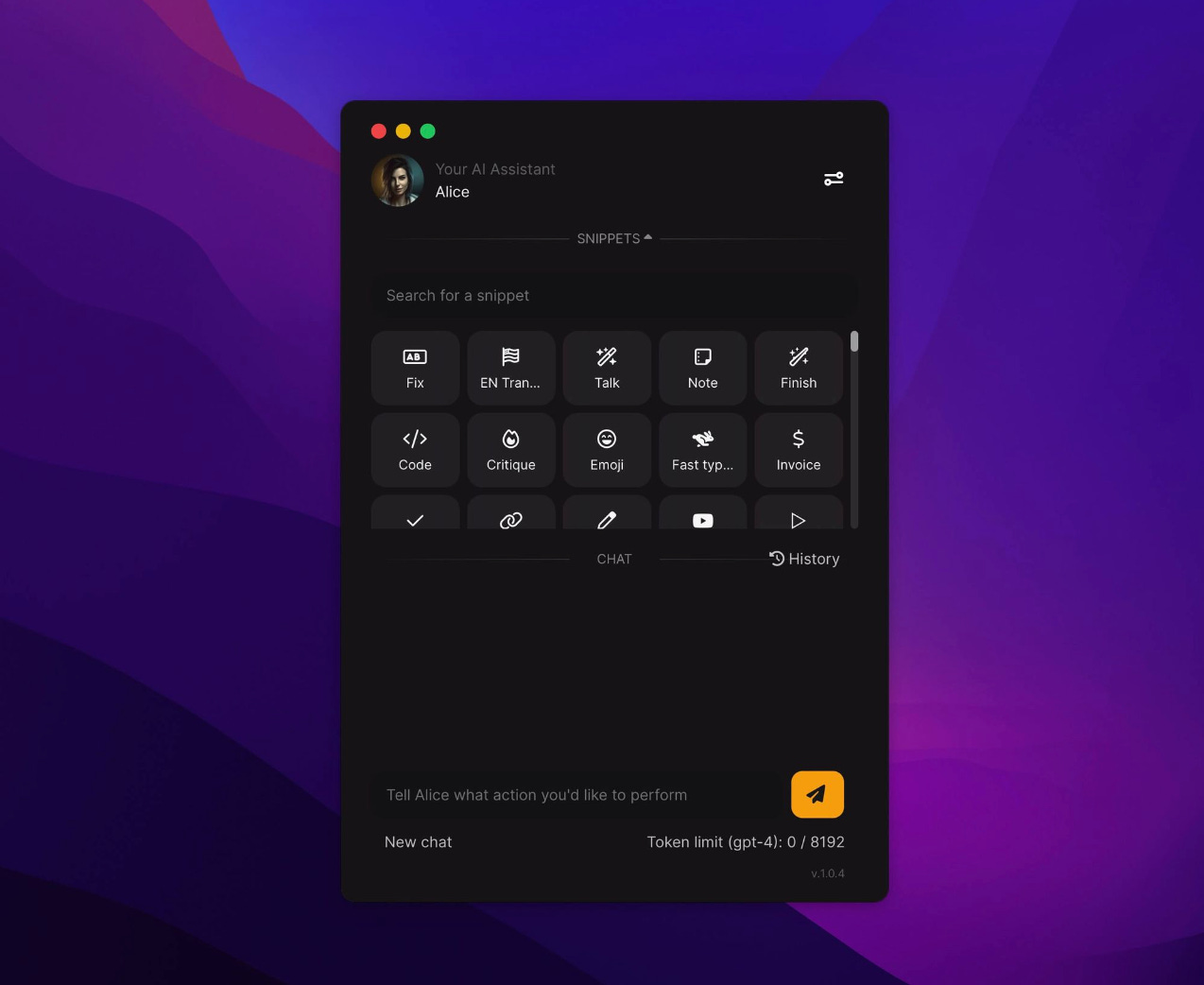

This is what the first Alice interface, which I designed, looked like:

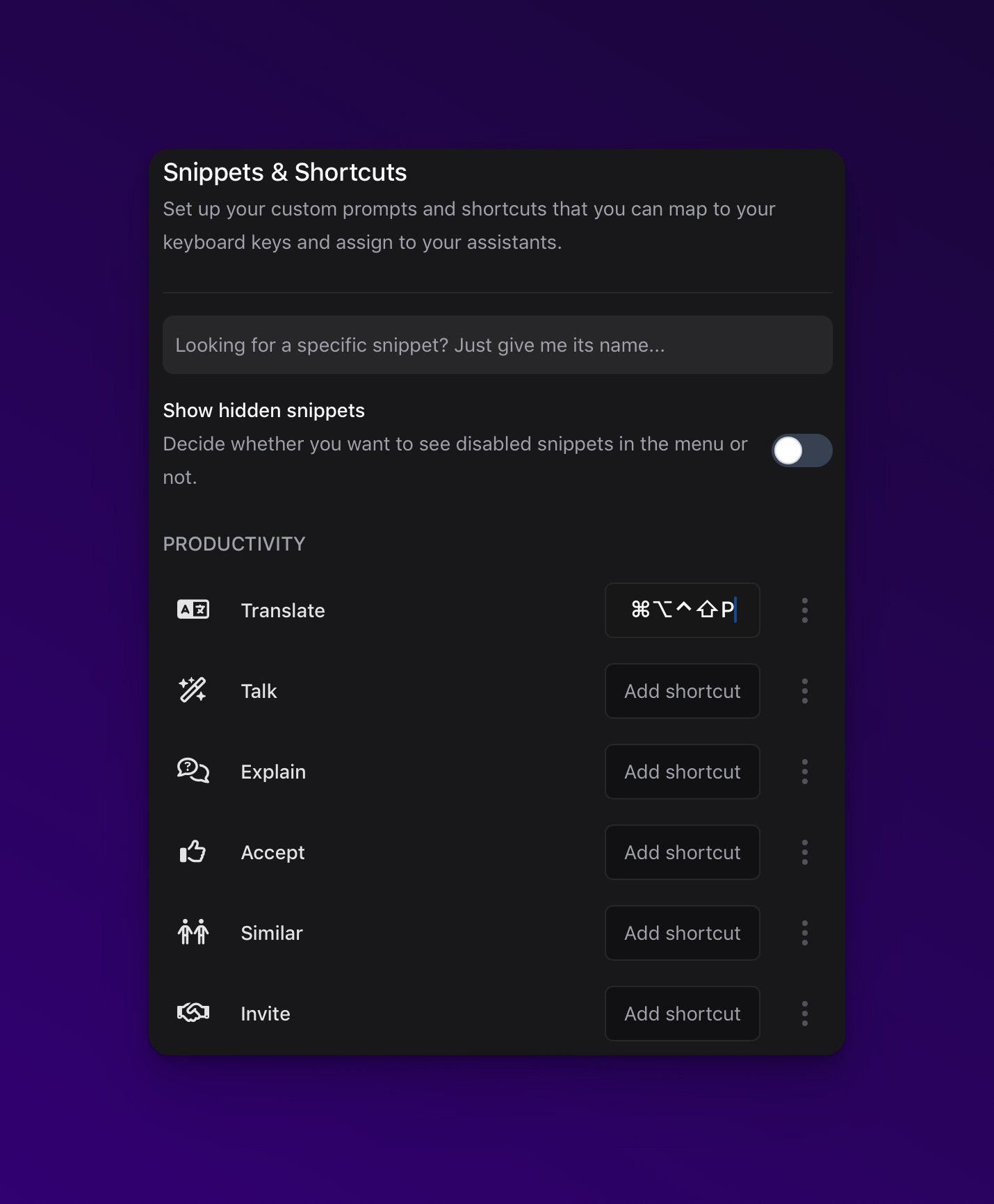

One of the key elements became Snippets — ready prompts that can also be invoked with a keyboard shortcut. This meant that we could easily create, for example, a Snippet that translated all content into Spanish, or one that corrected typos in the given text.

This is, of course, just the beginning of the fun. We also built remote actions into Snippets, similar to those you can currently perform in GPTs. The idea was that, instead of displaying the finished answer, Alice would send it directly to our automation. Thanks to this, a Snippet, which is essentially just a prompt, could send a formatted message to a webhook address handled by our automation in make.com.
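One way to picture a Snippet with a remote action is as a prompt plus an optional webhook address. The sketch below is a guess at the shape of the idea, not Alice's actual data model: the `Snippet` fields, the `run_snippet` function, and the injected `complete` and `send` callables are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Snippet:
    name: str
    prompt: str                         # instruction prepended to the selected text
    shortcut: str                       # keyboard shortcut, e.g. "cmd+shift+t"
    webhook_url: Optional[str] = None   # set only for Snippets with a remote action


def run_snippet(
    snippet: Snippet,
    selected_text: str,
    complete: Callable[[str, str], str],  # (prompt, text) -> model answer
    send: Callable[[str, str], None],     # (url, body) -> None, e.g. an HTTP POST
) -> Optional[str]:
    """Run the prompt; forward the answer to the webhook or return it for display."""
    answer = complete(snippet.prompt, selected_text)
    if snippet.webhook_url is not None:
        send(snippet.webhook_url, answer)
        return None  # nothing to display, the automation takes over
    return answer


# Stand-ins for the model call and the HTTP client, so the sketch runs offline.
sent = []
fake_complete = lambda prompt, text: f"[{prompt}] {text}"
fake_send = lambda url, body: sent.append((url, body))

translate = Snippet("Translate", "Translate to Spanish", "cmd+shift+t")
add_client = Snippet("Add client", "Return the client as JSON", "cmd+shift+c",
                     webhook_url="https://example.com/webhook/crm")

shown = run_snippet(translate, "Good morning", fake_complete, fake_send)
run_snippet(add_client, "John Doe, monthly plan", fake_complete, fake_send)
```

Passing the model call and the sender in as functions keeps the routing logic independent of any particular model provider or automation platform.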

We connected a number of ready-made automations to Snippets and it quickly turned out that Alice is able to accept quite careless input and turn it into an automation input carefully tailored to our needs!

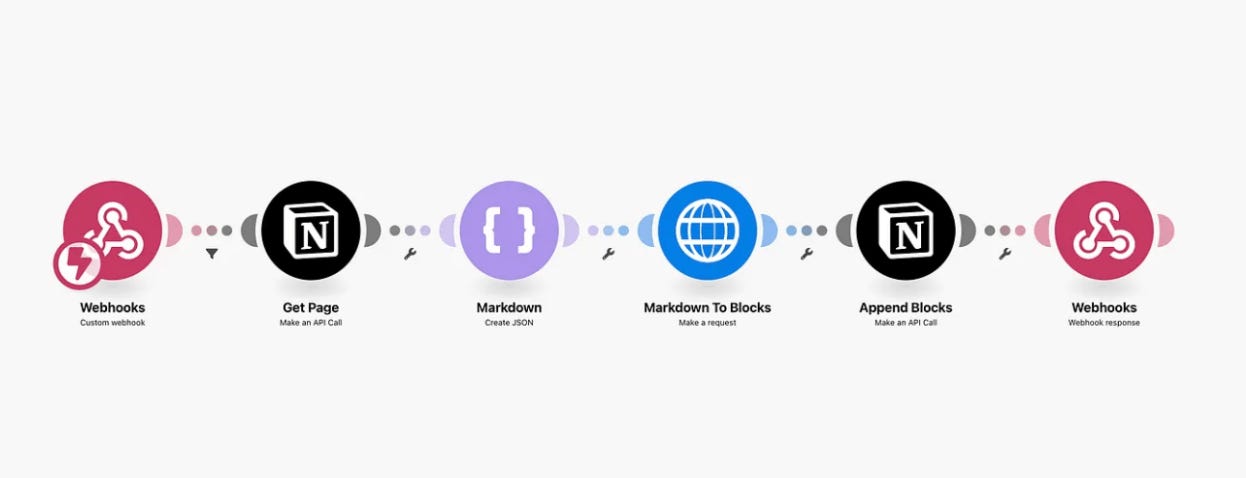

Here is an example of such a simple scenario in make.com, which received this data and added the customer details to our CRM in Notion:

From there, the magic began to happen ✨

It quickly turned out that Alice was useful not only to us: our teams started using it and gave us more and more feedback on improvements we could add to the application. The project, which started as our experiment, began to provide value to others. What's more, we ourselves started using it as one of our basic ways of interacting with LLMs, especially since we could develop and test different models at will.

Over time, it turned out that the original interface needed to be redesigned to provide the greatest possible comfort of using AI on the desktop, as this environment is the best for work.

The point is that we started using Alice more and more often, not only to launch automations but also instead of ChatGPT, as it turned out to be much more convenient.

There are other applications for interacting with models, but often they are simply wrappers for ChatGPT. Alternatives like TypingMind are developing nicely, but their interface is not yet polished enough to make the application pleasant and quick to use, which is a necessary condition for including it in our daily work as a typical assistant. Not to mention that these applications are simply further UI variants of ChatGPT, lacking the ability to perform actions and the agency that is so important in Alice.

So we decided to redesign the UI, taking into account not only remote actions but also user feedback, in order to work out the best possible way of interacting with LLMs in support of our productivity.

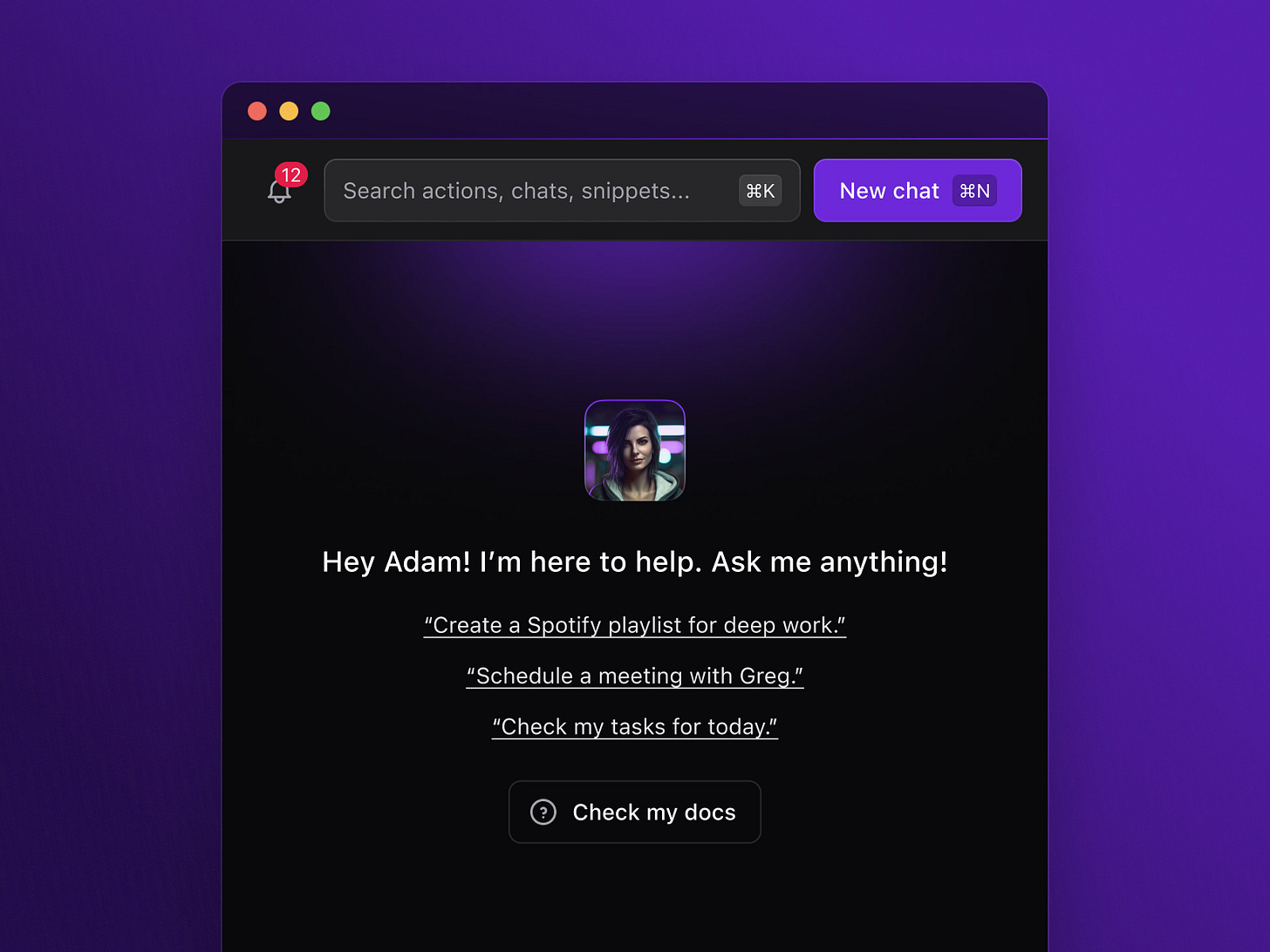

This is how the new version of Alice was created, which Mateusz Wierzbicki helped design:

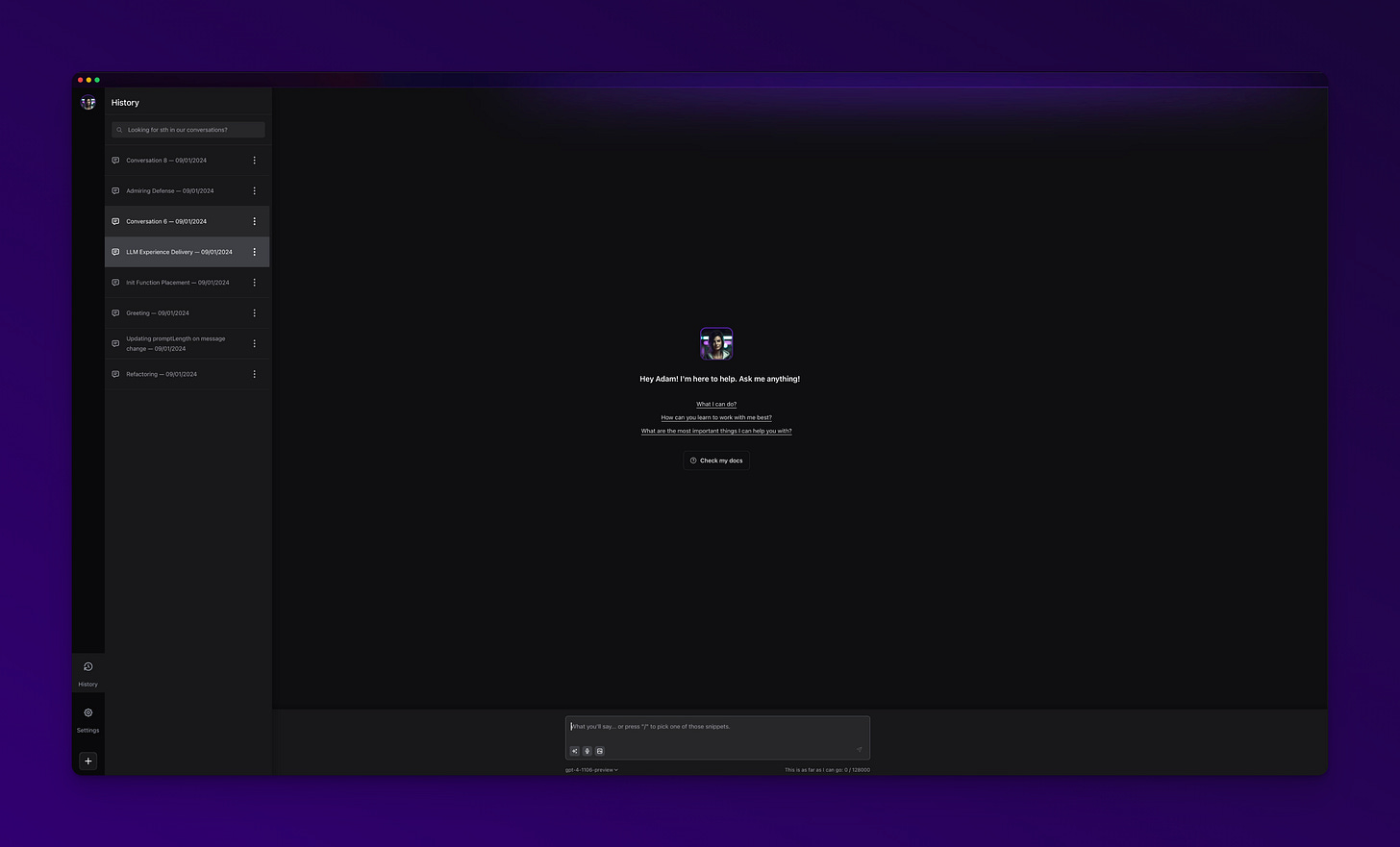

We thought through several modes in the application and designed the UI in such a way that it could actually be available as an assistant, anywhere and at arm’s length or… a keyboard shortcut. The conversation with Alice in the new version looks like this:

For me, it is the best and most fluid experience of conversing with artificial intelligence. The ability to switch to any model, for example GPT-4 Turbo or GPT-3.5 Turbo 16k, depending on the task at hand, means answers can arrive so quickly that it is impossible to keep up with reading them. That is exactly the threshold at which interaction with an LLM no longer makes us wait for anything. This is crucial in an interface that we expect to support productive work.

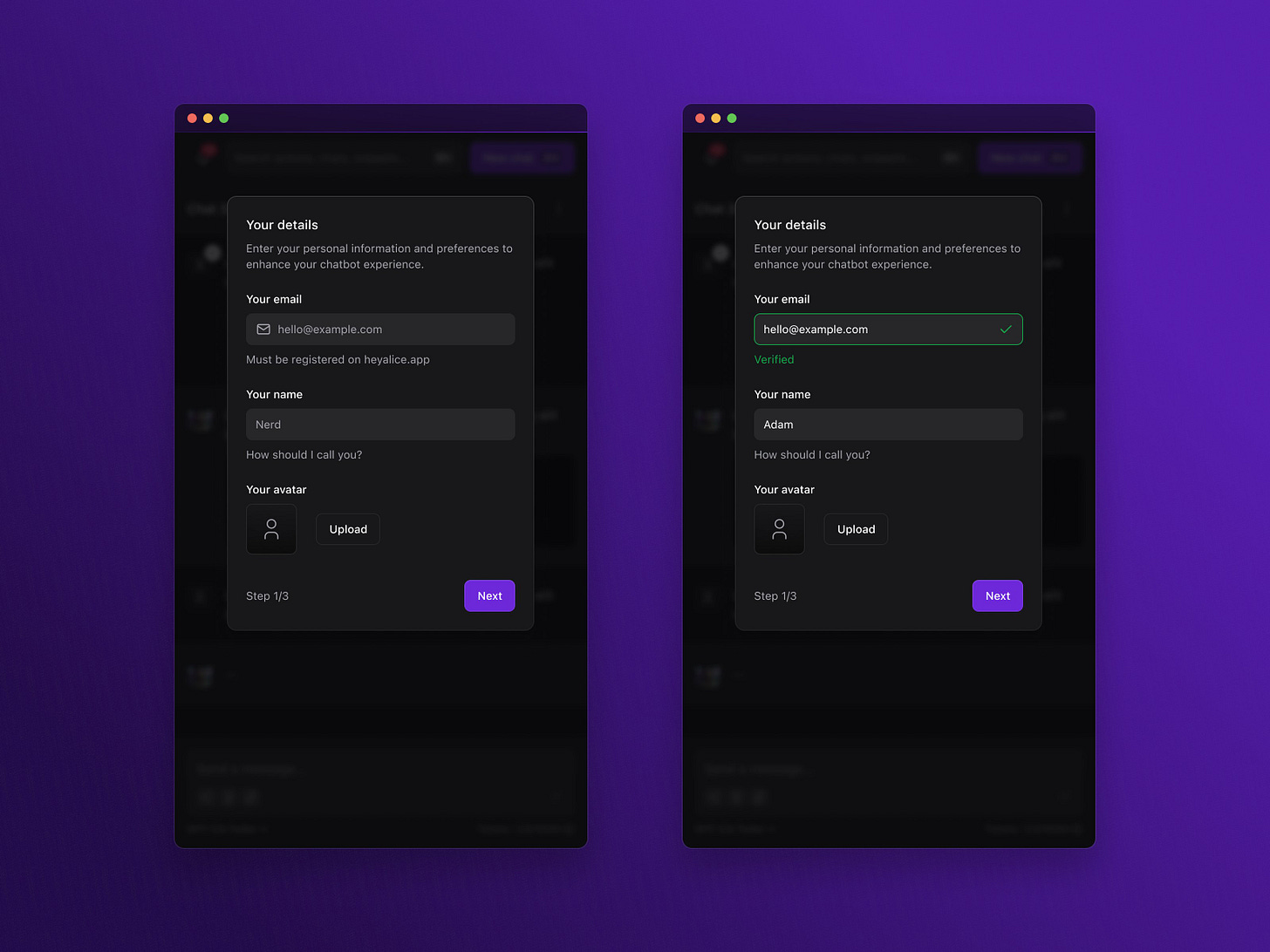

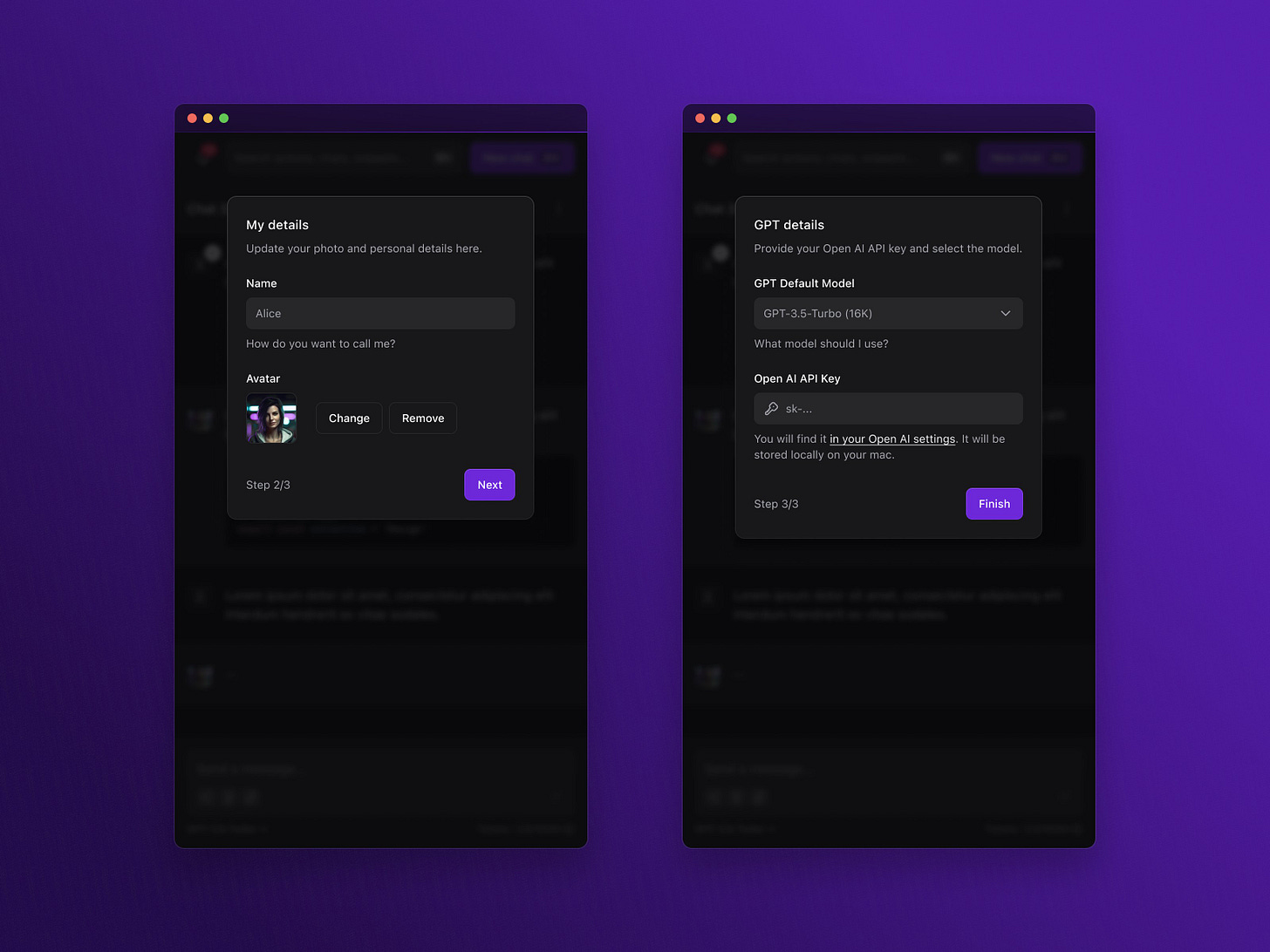

For the first launch of Alice, we designed a simple onboarding that lets you customize the application and enter your OpenAI API key.

Next, you have the opportunity to create your first, main assistant and choose the default version of the model for conversation:

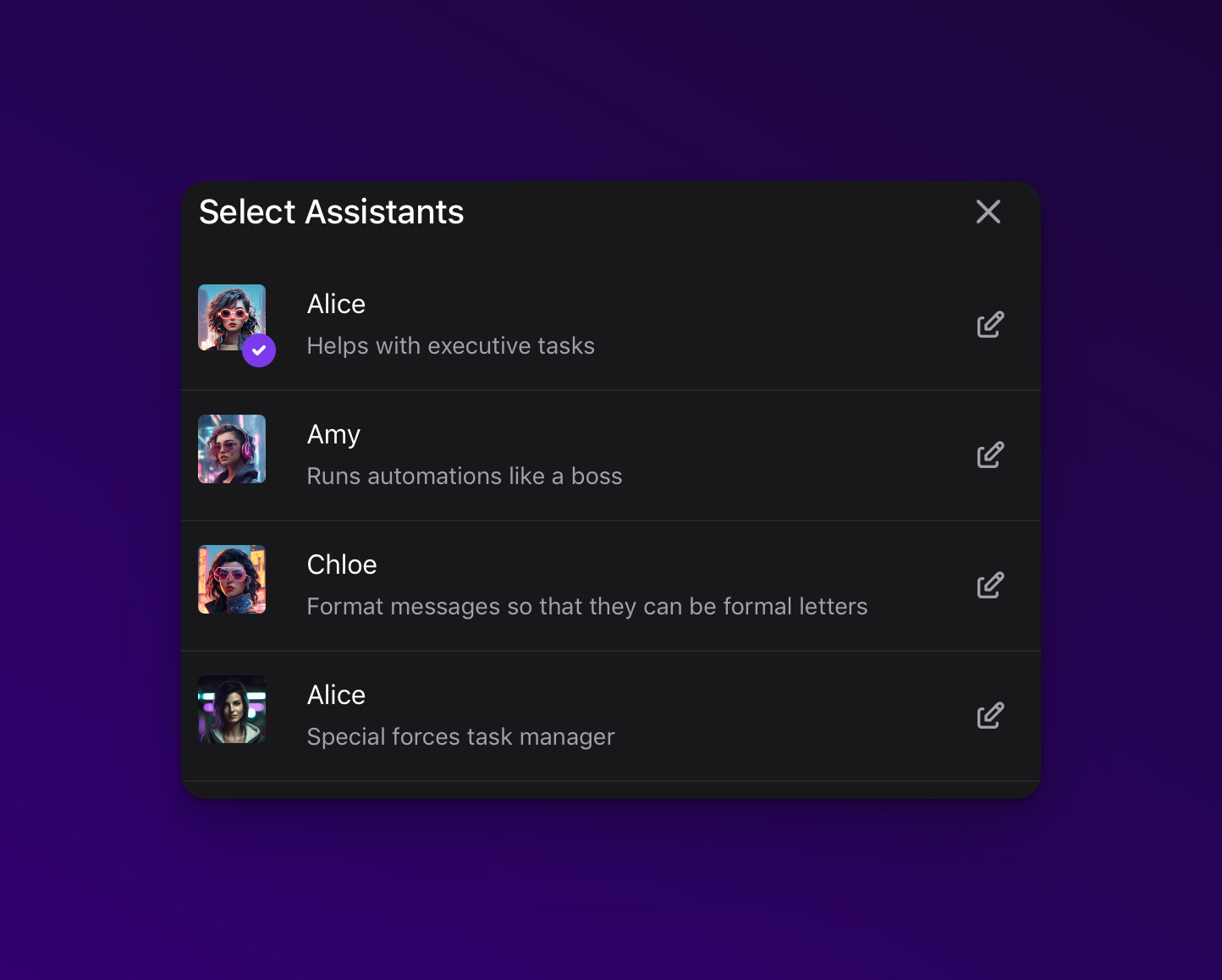

Why main? Because within Alice, I use many helpers: assistants whose main prompt is tailored to a specific task I want to perform. They work similarly to GPTs in ChatGPT, but are much faster and always at hand. Thanks to this, you can learn a new language with one assistant and brainstorm business ideas with another. Choosing an assistant in the UI is very simple: clicking the avatar brings up the list, which allows for smooth switching.
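Conceptually, each assistant is little more than a main prompt paired with a default model. The sketch below shows one possible way to represent that and to turn it into a chat request with a system message and a user message. The `Assistant` class, the `ASSISTANTS` registry, the prompts, and the model identifiers are illustrative assumptions, not Alice's internals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assistant:
    name: str
    system_prompt: str  # the "main prompt" tailored to one task
    model: str          # default model for this assistant


# Hypothetical assistants; names, prompts, and model identifiers are placeholders.
ASSISTANTS = {
    "main": Assistant("Alice", "You are a concise general-purpose assistant.", "gpt-4-turbo"),
    "spanish": Assistant("Tutor", "You help the user practise Spanish.", "gpt-3.5-turbo-16k"),
}


def build_chat_request(assistant_key: str, user_message: str) -> dict:
    """Build a chat request body: the assistant's prompt first, then the user's message."""
    assistant = ASSISTANTS[assistant_key]
    return {
        "model": assistant.model,
        "messages": [
            {"role": "system", "content": assistant.system_prompt},
            {"role": "user", "content": user_message},
        ],
    }


request_body = build_chat_request("spanish", "How do I say 'good morning'?")
```

Switching assistants then amounts to picking a different key, which is why the change can feel instant in the UI.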

The ability to use keyboard shortcuts to run a prompt on selected text and automatically copy the answer to the clipboard was a breakthrough for me in interacting with AI. The browser interface of ChatGPT is simply not convenient for this. Yes, it works well if creating some content is our only task, but generally our work just doesn't look like that. In most cases, we juggle various applications and want to quickly format, expand, or correct the currently edited content. In this case, copying it to ChatGPT and even preceding it with an instruction is simply completely inefficient!

That's why in Alice, keyboard shortcuts let you not only call up the application at any time but also run any Snippet (prompt) on selected text. We designed a convenient interface for defining Snippets and their shortcuts:

Shortcuts are also assigned to all important system functions of the application and they are easy to remember, as they appear next to the indicated action:

The situation is similar with working on files. ChatGPT currently allows uploading files and working with documents, and even if we ignore the fact that most of the time this ends in an unexplained error, such interaction with the model is undoubtedly very valuable. The problem is that the browser is not a good place to work with files, unlike the operating system, where we have everything at hand and don't have to wait for the file to be sent to a server (not to mention the security issues around those files).

In the case of Alice, it's easy to imagine that working with files we drop on the application can happen instantly and safely, because it takes place on our own computer (and very safely indeed if we use an offline model).

The entire interface was designed to be as compact as possible and encourage the user to use keyboard shortcuts. In this way, after just a few days of using Alice, it's simply hard to imagine working without it. However, we also prepared a view that allows for full-screen work, focusing on a specific conversation. This mode is similar to the ChatGPT interface and is useful when we want to work with the application itself for a longer time:

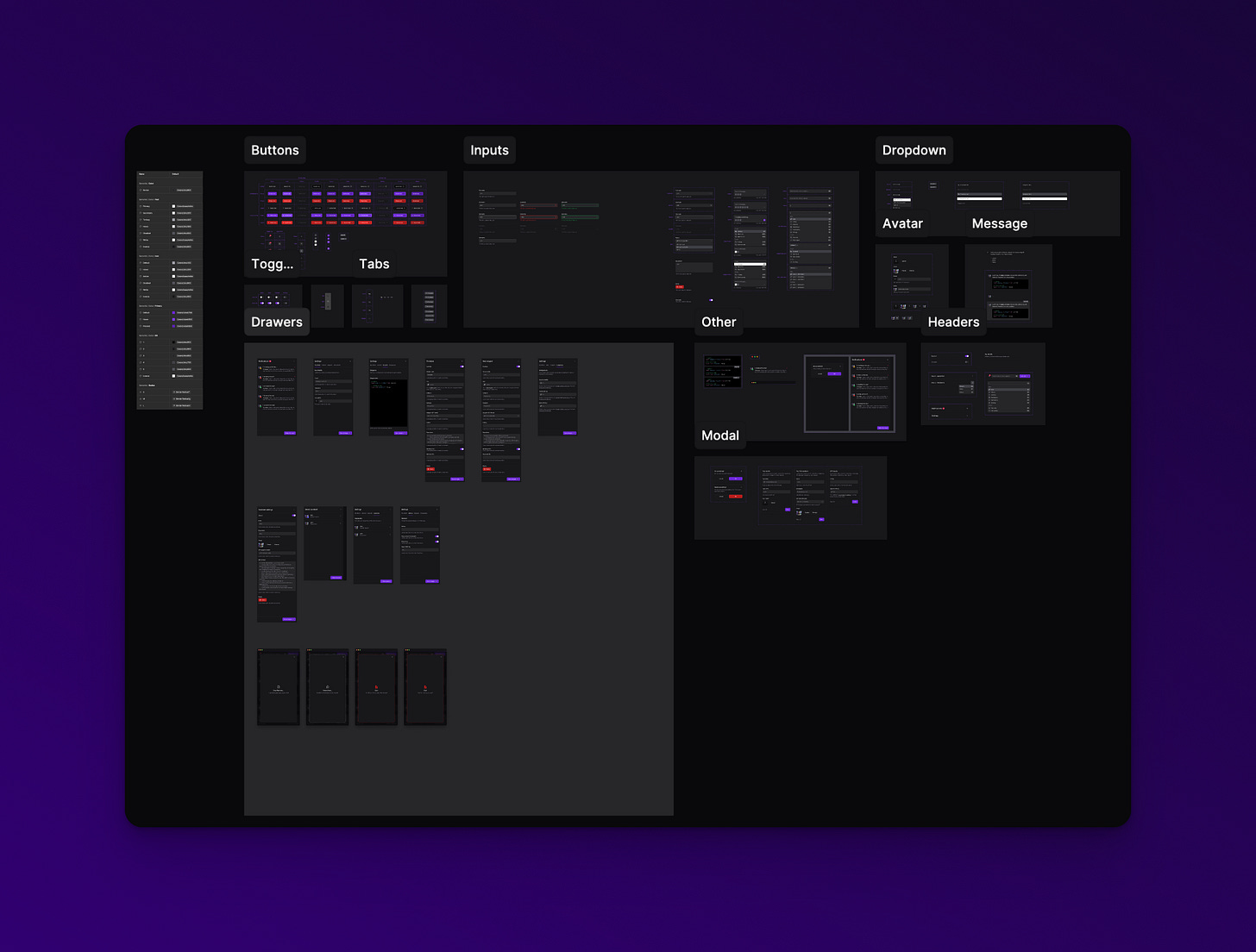

The design system of the Alice application was prepared in Figma and includes several hundred components:

We have been developing the Alice project for over a year, mainly for ourselves and our teams. Over time, we also made it available to people who joined our programs. We taught these people our approach to automation, so they could take advantage of Alice's unique capabilities and create their own actions. As a result, over 500 people currently use Alice almost daily, and another nearly 3000 are on the waiting list.

I still believe that Alice's greatest potential lies precisely in launching actions, and in this it is irreplaceable for us. But the new UI also makes it the fastest and most convenient way to interact with LLMs, so we practically don't look into ChatGPT anymore.

It is possible that Alice with the new interface will also find more widespread use among people who are not proficient in automations, and that working with an LLM on a daily basis will significantly increase their productivity.

First of all, we plan to make Alice available to people who use the paid Tech⋅sistence subscription.

Then we will gradually open access to people who participate in our programs and to those on the waiting list at heyalice.app. Only later do we plan to launch the application.

Throughout the year, Alice remained free and will remain so until its official premiere. In return, we only count on your feedback and help in the development and promotion of Alice.

To join the waiting list, go here »

Till next Thursday!

Greg
