Big updates to Alice, plus a free trial ✨

This month, our AI Assistant project has made strides. To share our journey, here's a sneak peek at our progress and a limited 7-day free trial of Alice!

Lately, we've been tirelessly working on enhancing Alice and preparing it for a fresh release. Three significant features have been successfully integrated into this new version:

✅ Voice Actions have been improved ("save the book from a screen to my Readwise")

✅ Custom AI now allows you to incorporate your own, private back-end knowledge base

✅ Claude 3.5 is now supported, which is a sweet addition

In celebration of these advancements, we're offering a unique opportunity to try the latest version of Alice, completely free (but you have to hurry as this ends on Sunday!).

You can download Alice here and initiate a 7-day free trial using our credits.

Additionally, you're welcome to bring your own API keys. Don't hesitate to give Alice a try now! Such opportunities don't come around often!

Ok, so let's explore what’s new in the latest and greatest version of Alice:

Voice Actions (beta)

TL;DR: Voice Actions let you activate the microphone with a keyboard shortcut and generate a response based on Snippet instructions or the result of a Remote Action.

Remember Samantha (ahem, Sky) from OpenAI's demo? 😉 Well, that went sideways. But we liked it! So we've taken Voice Actions a step further: you can now interact with Alice using just your voice. OpenAI's Whisper converts your voice recordings to text, and responses from the language models are read back to you using TTS (Text-to-Speech).

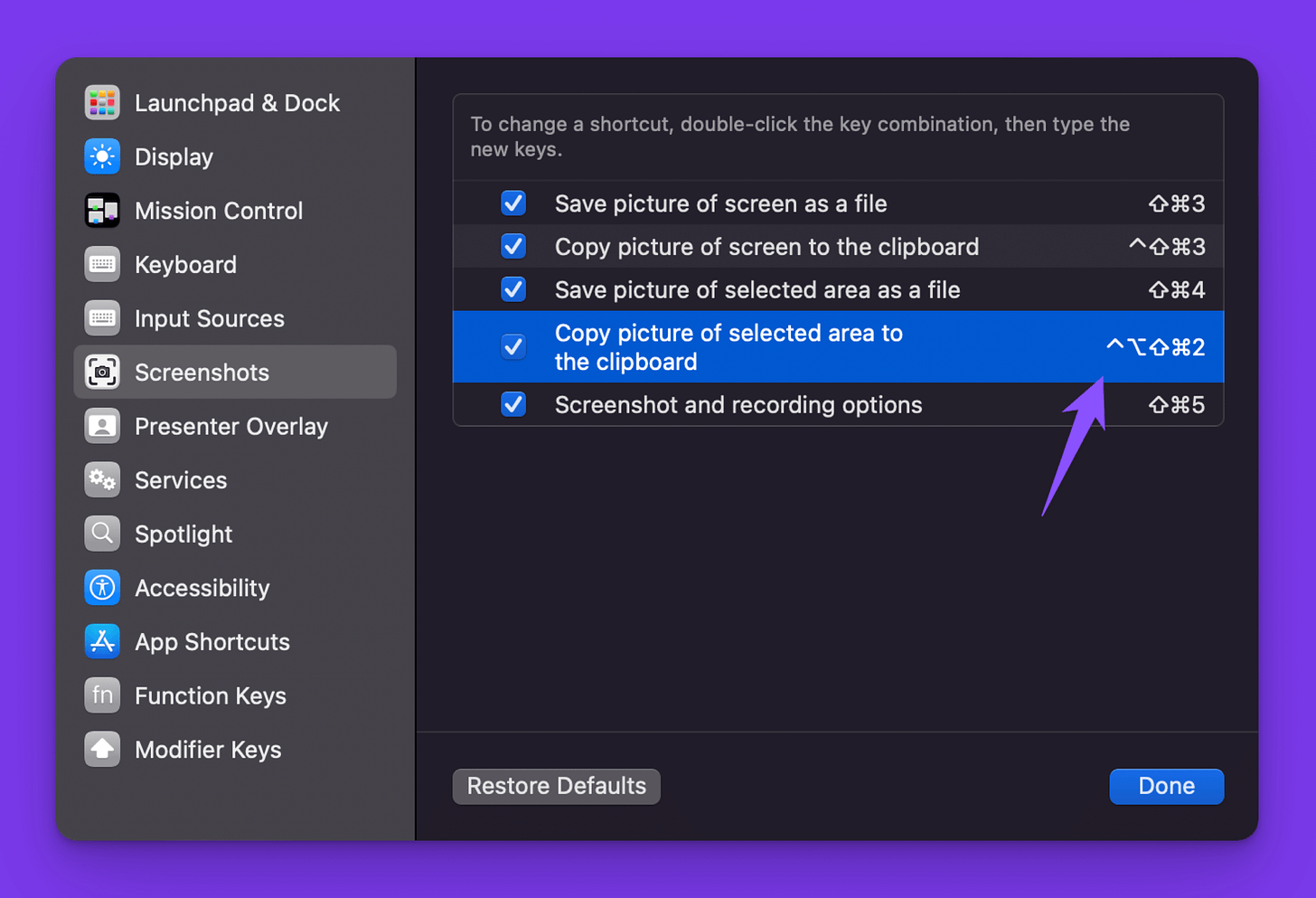

In practice, Voice Actions are an additional option for Snippets, which can be enabled in the Settings (Cmd+K → Settings). Recording will only be triggered when the Snippet is launched using a keyboard shortcut. Besides recording, it is also possible to include (or skip) the current contents of the system clipboard, which also supports copied images.

To make working with Snippets more convenient, set up a keyboard shortcut that takes a screenshot of a screen fragment and copies it to the clipboard.
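For the technically curious, the rules above (record only when the Snippet is launched with a shortcut, and optionally include the clipboard) can be sketched roughly like this. This is only an illustration and not Alice's actual code: every name here (`SnippetConfig`, `LaunchSource`, `buildSnippetInput`, and so on) is hypothetical.

```typescript
// Hypothetical shapes for illustration; none of these names come from Alice.
type LaunchSource = "keyboard-shortcut" | "menu";

interface ClipboardContent {
  text?: string;
  imageBase64?: string; // copied images, e.g. a screenshot fragment
}

interface SnippetConfig {
  instructions: string;
  voiceEnabled: boolean; // the Voice Action option enabled in Settings
  includeClipboard: boolean; // include (or skip) the clipboard contents
}

interface SnippetInput {
  instructions: string;
  transcript?: string;
  clipboard?: ClipboardContent;
}

// Recording is only triggered when the Snippet is launched with a shortcut.
function shouldRecord(config: SnippetConfig, source: LaunchSource): boolean {
  return config.voiceEnabled && source === "keyboard-shortcut";
}

// Assemble what would be sent to the model: instructions, plus the
// transcribed recording and the clipboard when the rules allow them.
function buildSnippetInput(
  config: SnippetConfig,
  source: LaunchSource,
  transcript: string | undefined,
  clipboard: ClipboardContent | undefined
): SnippetInput {
  const input: SnippetInput = { instructions: config.instructions };
  if (shouldRecord(config, source) && transcript) {
    input.transcript = transcript.trim();
  }
  if (config.includeClipboard && clipboard) {
    input.clipboard = clipboard;
  }
  return input;
}
```

In this sketch, launching the same Snippet from a menu simply skips the transcript, and turning off `includeClipboard` leaves the screenshot out.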

Voice Actions also connect directly with Remote Actions. We can dictate a message, attach an image to it, and have Alice send the specified information to the automation scenario.

A practical example might be taking a screenshot fragment and asking a question about it. Below, Alice correctly read the structure of the automation scenario. It's important to be aware of the limitations of VLMs (Vision Language Models) and multimodal models in general. They do well with simple tasks, e.g., reading text or general image recognition, but confabulation (or hallucination) and simple mistakes still occur.

Here's a quick video showcasing how it works:

Something else? We knew you’d ask! 😊 Using Voice Actions, we were able to:

Ask for explanations (like the explanation of complex scenarios)

Interpret results displayed on screens

Make calculations based on data from screenshots (please, double-check the results!)

Custom AI (experimental) 🤯

This is a big one for us. We're developing Alice to be the best front-end for any LLM use. And we know exactly how the future of work will look with apps like Alice. In fact, we already use it in our teams and know there's no going back.

So, what's the deal? Alice should be able to work with your company's data and connect to your custom AI and back-end. This means your entire knowledge base will be at your fingertips. Plus, you can limit access to data based on different company roles. Sounds insane, right? But wait, there's more! You'll also be able to use offline, safe, and private models to operate on these knowledge bases.

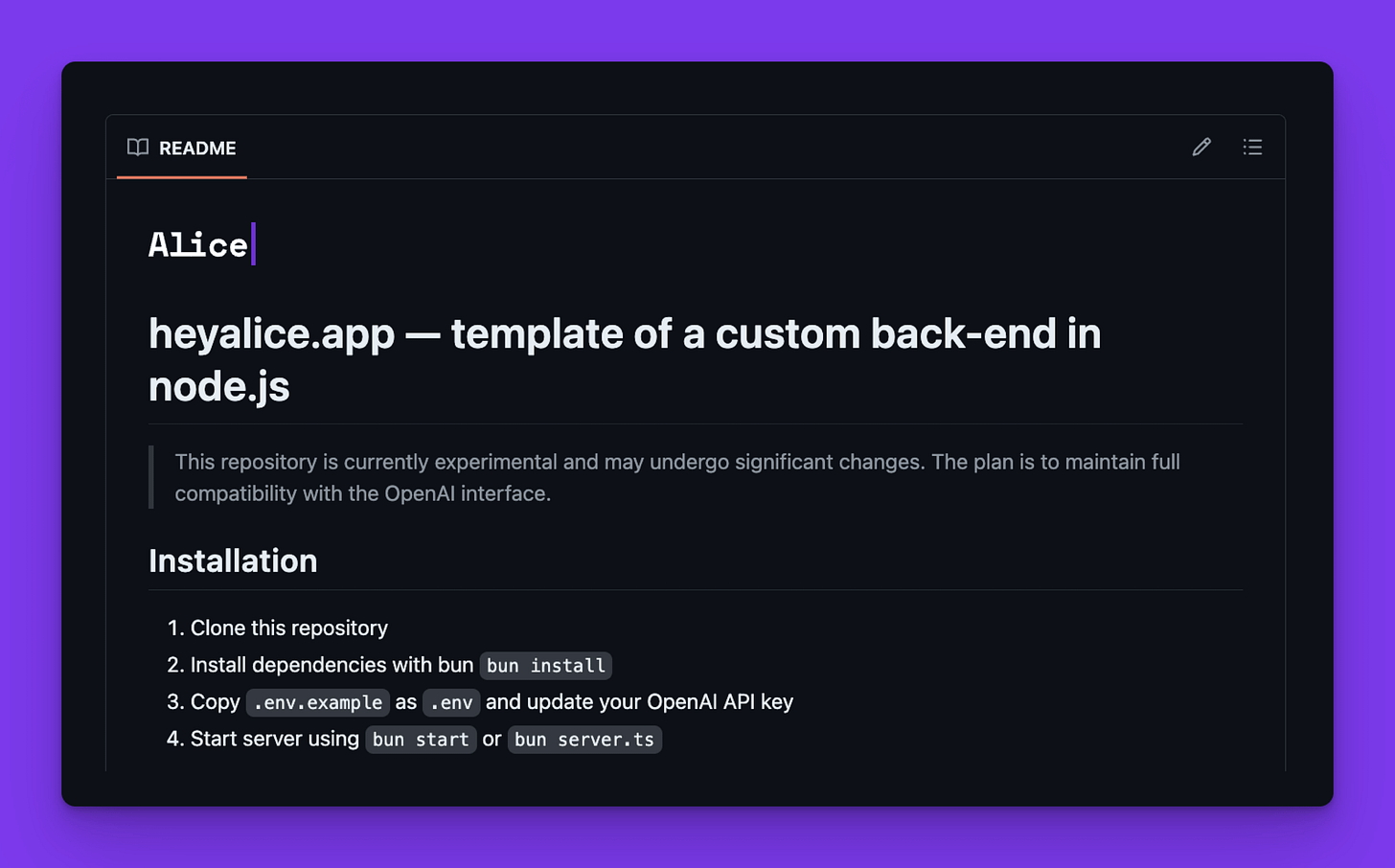

By default, Alice communicates directly with OpenAI, Anthropic, Groq, Perplexity, or Ollama servers. However, from now on, it is also possible to connect your own back-end application.

This opens up possibilities for:

Connecting your own knowledge base

Integrating your own logic for tool handling

Implementing advanced "AI Agents" logic

Connecting your own language models

This is probably great news for all of you with a bit more technical experience or those working in companies with back-end developers on board. In practice, this is not that scary, especially since an example of a Node.js server can be found here.
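To give a feel for what "connecting your own knowledge base" with role-based access could mean, here is a minimal sketch of the logic such a back-end might run before calling a model. It is not the official example server and says nothing about the request format Alice actually sends: the shapes (`CustomAiRequest`, `KnowledgeEntry`, `userRole`) and the naive keyword search are all assumptions made for illustration.

```typescript
// Hypothetical request and data shapes; Alice's real contract may differ.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface KnowledgeEntry {
  id: string;
  text: string;
  allowedRoles: string[]; // company roles allowed to see this entry
}

interface CustomAiRequest {
  userRole: string;
  messages: ChatMessage[];
}

// Keep only the entries the caller's company role is allowed to see.
function visibleEntries(kb: KnowledgeEntry[], userRole: string): KnowledgeEntry[] {
  return kb.filter((entry) => entry.allowedRoles.indexOf(userRole) !== -1);
}

// Naive keyword retrieval: rank entries by how many query words they contain.
function retrieve(kb: KnowledgeEntry[], query: string, limit = 3): KnowledgeEntry[] {
  const words = query.toLowerCase().split(/\W+/).filter((w) => w.length > 2);
  return kb
    .map((entry) => {
      const text = entry.text.toLowerCase();
      const score = words.filter((w) => text.indexOf(w) !== -1).length;
      return { entry, score };
    })
    .filter((scored) => scored.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((scored) => scored.entry);
}

// Find the most recent user message, if any.
function lastUserMessage(messages: ChatMessage[]): string | undefined {
  for (let i = messages.length - 1; i >= 0; i--) {
    const message = messages[i];
    if (message && message.role === "user") return message.content;
  }
  return undefined;
}

// Build the messages to forward to whichever language model you connect.
function buildModelMessages(req: CustomAiRequest, kb: KnowledgeEntry[]): ChatMessage[] {
  const query = lastUserMessage(req.messages);
  const context = query ? retrieve(visibleEntries(kb, req.userRole), query) : [];
  if (context.length === 0) return req.messages;
  const system: ChatMessage = {
    role: "system",
    content: "Use this company context:\n" + context.map((e) => "- " + e.text).join("\n"),
  };
  return [system, ...req.messages];
}
```

A real back-end would wrap something like `buildModelMessages` in an HTTP endpoint, swap the keyword search for proper retrieval, and pass the result to the model of your choice, including an offline one.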

IMPORTANT: This functionality is currently experimental and may not work correctly.

Go get it! Flash trial ends on Sunday ⚡

Typically, Alice isn't available for a trial run. This is primarily because we offer complimentary credits that come at a cost to us, and we strive to allocate ample time to support our valued clients.

However, we've decided to make a small exception because we genuinely want you and your friends to experience Alice. So, don't hold back. Grab your free trial, no strings attached - no personal details, credit cards, or any other unnecessary information required:

Starting my 7-day flash trial of Alice for free »

Simply download the version compatible with your operating system, and Alice will lead you through the rest of the process ⭐

One more thing…

We're trying really hard to split our time between product and marketing, but in effect we end up heads-down in product development with not-so-great marketing. This is why we struggle with the adoption of Alice.

And we need your help!

Please, share this link to a free trial with your friends:

https://www.heyalice.app/download

Or retweet my post on X. Or both for our ultimate gratitude 💜

Put it on your socials, send it in emails, send pigeons—please, do anything that might work. This is 5 minutes for you and means the world to us. And we'll all benefit if we have more resources to run this project.

THANK YOU SO MUCH!

All the best,

Greg

P.S.: We're still gathering your fantastic feedback from our recent post. Thank you immensely for all the kind words and suggestions. I'll compile all our learnings and future plans for our newsletter here on Substack, next week 💚 Until then!